WebRTC Glossary

Lately, we’ve been asking a lot of questions about WebRTC, and we know many of you have been too. But with so many terms to unpack, it can be difficult to know where to start on your journey to ultra-low latency streaming. Let this WebRTC glossary be your guide.

We’ve taken the time to provide a high-level look at a variety of terms likely to come up in your WebRTC studies. Take some time to wrap your head around the big picture, then drill down into the provided links for a more detailed look at these key concepts.


API

An Application Programming Interface (API) is a defined set of functions and rules that lets one piece of software request services from another. Out-of-the-box WebRTC is exposed in browsers through a set of JavaScript APIs, but those aren’t your only option. Many cloud-based streaming solutions offer their own APIs alongside their web interfaces.

Application Server

Application servers effectively provide web hosting. In the case of WebRTC streaming, the application server is where the WebRTC application is hosted. These servers are essential any time you seek to host WebRTC online.

Audio Codecs

This is exactly what it sounds like: codecs that encode (compress) raw audio media files for transport across a network and decode (decompress) those same files for playback on the end-user device. Every WebRTC implementation is required to support two audio codecs, Opus and G.711, both of which are discussed in more depth in their glossary sections.
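To see which audio codecs a session actually offers, you can read the `a=rtpmap` lines of the SDP exchanged during setup. The sketch below parses those lines; the SDP fragment is hand-written for illustration rather than captured from a real browser. G.711 appears as `PCMU` and `PCMA` (static payload types 0 and 8), while Opus uses a dynamically negotiated payload type, so the `111` shown here is only an example.

```typescript
// One entry per "a=rtpmap:<payload type> <encoding>/<clock rate>[/<channels>]" line.
interface RtpMapEntry {
  payloadType: number;
  encoding: string;
  clockRate: number;
  channels: number; // defaults to 1 when the line omits it
}

function parseRtpMaps(sdp: string): RtpMapEntry[] {
  const entries: RtpMapEntry[] = [];
  for (const line of sdp.split(/\r?\n/)) {
    const match = /^a=rtpmap:(\d+) ([^\/\s]+)\/(\d+)(?:\/(\d+))?/.exec(line);
    if (match === null) continue;
    entries.push({
      payloadType: Number(match[1]),
      encoding: match[2],
      clockRate: Number(match[3]),
      channels: match[4] !== undefined ? Number(match[4]) : 1,
    });
  }
  return entries;
}

// Hand-written example of an audio m= section (not captured from a real browser).
const exampleAudioSdp = [
  "m=audio 9 UDP/TLS/RTP/SAVPF 111 0 8",
  "a=rtpmap:111 opus/48000/2",
  "a=rtpmap:0 PCMU/8000",
  "a=rtpmap:8 PCMA/8000",
].join("\r\n");

for (const entry of parseRtpMaps(exampleAudioSdp)) {
  console.log(`${entry.payloadType}: ${entry.encoding} @ ${entry.clockRate} Hz, ${entry.channels} ch`);
}
```

Note that Opus is always signaled as `opus/48000/2` regardless of the audio actually being sent, so the rtpmap line alone doesn’t tell you whether a stream is mono or narrowband.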

AV1

AOMedia Video 1 (AV1), developed by the Alliance for Open Media, is one of several possible video codecs for WebRTC streaming. Since its release in 2018, it has grown in popularity. However, it has historically been difficult to encode in real time, which has made it less popular for live streaming. That said, some WebRTC deployments do use this video codec, including products from Cisco and Google, as well as Wowza’s own Real-Time Streaming at Scale.

Bandwidth Estimation

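This is the process by which WebRTC estimates the bandwidth available between the sender and a given end-user device. The sender then encodes raw media data at a bitrate that fits that estimate, balancing quality against reliability, and keeps adjusting as network conditions change. Workflows like WebRTC simulcasting and SVC change this process by placing a middleman, an SFU, between the publisher and the viewers: the SFU tracks each viewer’s bandwidth and forwards a suitable stream or layer, rather than the publisher encoding a single bitrate for everyone.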
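The adjust-as-you-go part of bandwidth estimation can be illustrated with a deliberately simplified, loss-based controller. This is a minimal sketch loosely modeled on the loss-based rules described for Google Congestion Control (GCC); real WebRTC stacks also use delay-based estimation, probing, and smoothing, none of which is shown. The limits and loss reports are hypothetical values.

```typescript
// Simplified loss-based target bitrate update, applied once per feedback interval.
interface RateLimits {
  minBps: number;
  maxBps: number;
}

function nextTargetBitrate(currentBps: number, lossFraction: number, limits: RateLimits): number {
  let next = currentBps;
  if (lossFraction > 0.1) {
    // Heavy loss: back off in proportion to how much is being lost.
    next = currentBps * (1 - 0.5 * lossFraction);
  } else if (lossFraction < 0.02) {
    // Little or no loss: probe upward gently.
    next = currentBps * 1.05;
  }
  // Between 2% and 10% loss the target is held steady.
  const clamped = Math.min(limits.maxBps, Math.max(limits.minBps, next));
  return Math.round(clamped);
}

const limits: RateLimits = { minBps: 100_000, maxBps: 2_500_000 };
let targetBps = 1_000_000;
// Hypothetical loss reports, one per feedback interval.
for (const loss of [0, 0, 0.05, 0.25, 0]) {
  targetBps = nextTargetBitrate(targetBps, loss, limits);
  console.log(`loss ${(loss * 100).toFixed(0)}% -> target ${targetBps} bps`);
}
```

The asymmetry (small multiplicative increases, larger proportional decreases) is what lets a sender creep toward the available bandwidth without repeatedly overshooting it.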

Bitrate

This is exactly what it sounds like: the rate at which bits are sent over a network, measured in bits per second (bps). A higher bitrate generally produces a higher-quality stream but also requires more bandwidth. If the bitrate exceeds the available bandwidth (also expressed in bps), packets are delayed or lost, which viewers experience as buffering or frozen video. WebRTC does not hold the bitrate constant over the duration of a stream; it continuously raises or lowers the encoder’s target bitrate based on bandwidth estimation.

Browser-Based Encoding

WebRTC was designed for browser-based encoding, which does not lend itself to larger broadcasts. This method is great for a simple stream, but anyone who wants to exercise more control over the encoding process will find that browser-based encoding falls short. New technologies, like Wowza’s Real-Time Streaming at Scale, overcome this limitation by making it possible to stream via any encoder, use RTMP ingest, or leverage a custom OBS integration.

Codec

Codec refers to the algorithm that encodes (compresses) and decodes (decompresses) raw media files for transport across a network. Because raw media files are too big to be sent as-is, they need to be shrunk down for delivery using a codec. It’s worth noting that not all codecs are the same. They can differ in compression efficiency, CPU requirements, and more. Additionally, in video streaming, audio and video data are compressed separately, by audio codecs and video codecs respectively, but more on those in their glossary sections.
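A little arithmetic shows just how big raw media is. This sketch assumes 8-bit 4:2:0 video (an average of 12 bits per pixel, a common raw format) and uncompressed 16-bit PCM audio; other raw formats would give different, usually larger, numbers.

```typescript
// Uncompressed video bitrate: pixels per frame x frames per second x bits per pixel.
function rawVideoBitrate(width: number, height: number, fps: number, bitsPerPixel = 12): number {
  return width * height * fps * bitsPerPixel;
}

// Uncompressed PCM audio bitrate: samples per second x channels x bits per sample.
function rawAudioBitrate(sampleRateHz: number, channels: number, bitsPerSample = 16): number {
  return sampleRateHz * channels * bitsPerSample;
}

const rawVideoBps = rawVideoBitrate(1920, 1080, 30); // 1080p at 30 fps
const rawAudioBps = rawAudioBitrate(48_000, 2);       // 48 kHz stereo
console.log(`raw 1080p30 video: ${(rawVideoBps / 1e6).toFixed(1)} Mbps`); // raw 1080p30 video: 746.5 Mbps
console.log(`raw 48 kHz stereo audio: ${(rawAudioBps / 1e3).toFixed(0)} kbps`); // raw 48 kHz stereo audio: 1536 kbps
```

At roughly 746 Mbps, raw 1080p30 video is far beyond a typical home connection, whereas a compressed 1080p stream typically needs only a few megabits per second.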

Encoder/Encoding

The process of encoding raw video files can be a little confusing with all of the terminology that gets thrown around. The easiest way to think of it is that an encoder uses a codec to encode raw media files. Encoding is also sometimes referred to as compressing and is done to “package” data for transfer across a network.

Encryption

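Encryption refers to the process of securing data as it travels over a network. WebRTC is secure by design: it mandates encryption for all media (SRTP, keyed via DTLS) and for data channels (SCTP over DTLS). However, this doesn’t mean it’s foolproof. Additional measures, such as controlling who is allowed to publish and view a stream, help further secure your stream and prevent unauthorized access.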

G.711

Alongside Opus, G.711 is the other mandatory audio codec for WebRTC. G.711 supports narrowband audio only.
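G.711 is essentially uncompressed telephone audio: 8,000 samples per second at 8 bits per sample, with logarithmic companding. That makes its bitrate easy to work out. This sketch computes the payload bitrate and the payload size of one RTP packet; the 20 ms packet duration is a common choice rather than a fixed rule, and RTP, UDP, and IP header overhead are not included.

```typescript
// G.711: 8,000 samples per second, 8 bits per sample.
const G711_SAMPLE_RATE_HZ = 8_000;
const G711_BITS_PER_SAMPLE = 8;

function g711PayloadBitrate(channels = 1): number {
  return G711_SAMPLE_RATE_HZ * G711_BITS_PER_SAMPLE * channels;
}

// Bytes of G.711 payload carried in one RTP packet of the given duration.
function g711PayloadBytes(packetMs: number): number {
  const samplesPerPacket = (G711_SAMPLE_RATE_HZ * packetMs) / 1000;
  return samplesPerPacket * (G711_BITS_PER_SAMPLE / 8);
}

console.log(g711PayloadBitrate()); // 64000
console.log(g711PayloadBytes(20)); // 160
```

Its fixed 64 kbps is simple and cheap to process, but it is considerably more than Opus typically needs for speech of equal or better quality.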

ICE Protocol

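The Interactive Connectivity Establishment (ICE) protocol works in concert with the STUN and TURN protocols (see the sections on STUN and TURN servers) to facilitate NAT traversal. It gathers candidate addresses for each peer (local, public, and relayed IP address and port combinations, known as ICE candidates), which are exchanged through the signaling channel, typically as part of the Session Description Protocol (SDP). It then tests connectivity between pairs of local and remote candidates and selects the best working pair for streaming.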
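ICE decides which candidate pairs to try first using priority formulas from the ICE specification (RFC 8445). The sketch below computes candidate priorities with the specification’s recommended type preferences and orders pairs accordingly. It is simplified: real ICE agents only pair candidates of the same component and address family and prune redundant pairs, and the candidate lists here are hypothetical.

```typescript
// Candidate types and the type preferences recommended by RFC 8445.
type CandidateType = "host" | "prflx" | "srflx" | "relay";

const TYPE_PREFERENCE: Record<CandidateType, number> = {
  host: 126,
  prflx: 110,
  srflx: 100,
  relay: 0,
};

// priority = 2^24 * type preference + 2^8 * local preference + (256 - component ID)
function candidatePriority(type: CandidateType, localPreference = 65535, componentId = 1): number {
  return TYPE_PREFERENCE[type] * 2 ** 24 + localPreference * 2 ** 8 + (256 - componentId);
}

// Pair priority = 2^32*MIN(G,D) + 2*MAX(G,D) + (G > D ? 1 : 0), where G is the controlling
// agent's candidate priority and D the controlled agent's. That value can exceed 2^53, so
// compare its parts in order instead. Sorting with this comparator puts the highest first.
function comparePairs(a: [number, number], b: [number, number]): number {
  const [aG, aD] = a;
  const [bG, bD] = b;
  const minDiff = Math.min(bG, bD) - Math.min(aG, aD);
  if (minDiff !== 0) return minDiff;
  const maxDiff = Math.max(bG, bD) - Math.max(aG, aD);
  if (maxDiff !== 0) return maxDiff;
  return (bG > bD ? 1 : 0) - (aG > aD ? 1 : 0);
}

// Hypothetical candidates: local agent is controlling, remote agent is controlled.
const localCandidates: CandidateType[] = ["host", "srflx", "relay"];
const remoteCandidates: CandidateType[] = ["host", "srflx"];
const pairs: Array<{ label: string; prio: [number, number] }> = [];
for (const l of localCandidates) {
  for (const r of remoteCandidates) {
    pairs.push({ label: `${l} -> ${r}`, prio: [candidatePriority(l), candidatePriority(r)] });
  }
}
pairs.sort((x, y) => comparePairs(x.prio, y.prio));
console.log(pairs.map((p) => p.label));
```

Direct host-to-host pairs come first and relayed (TURN) pairs come last, which matches the idea that relaying is the fallback when nothing more direct works.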

In-Browser Compositing

In-browser compositing allows you to make a composite stream utilizing a variety of sources. It’s sort of like video editing a live stream right in your browser. This doesn’t have to be as complicated as it sounds if you go through the right API.

Interactivity

Quite simply, this is the ability that some live streams have to support two-way engagement with audiences. Examples include live streamed auctions, betting, educational streams that invite viewer questions and feedback, esports applications using viewer comments, etc. In order for a stream to be truly interactive, it needs to be near real time in latency. We call this ultra-low latency or sub-second latency. Interactive streams that fail to meet this standard will negatively affect the live stream experience and nullify the interactive nature of it.

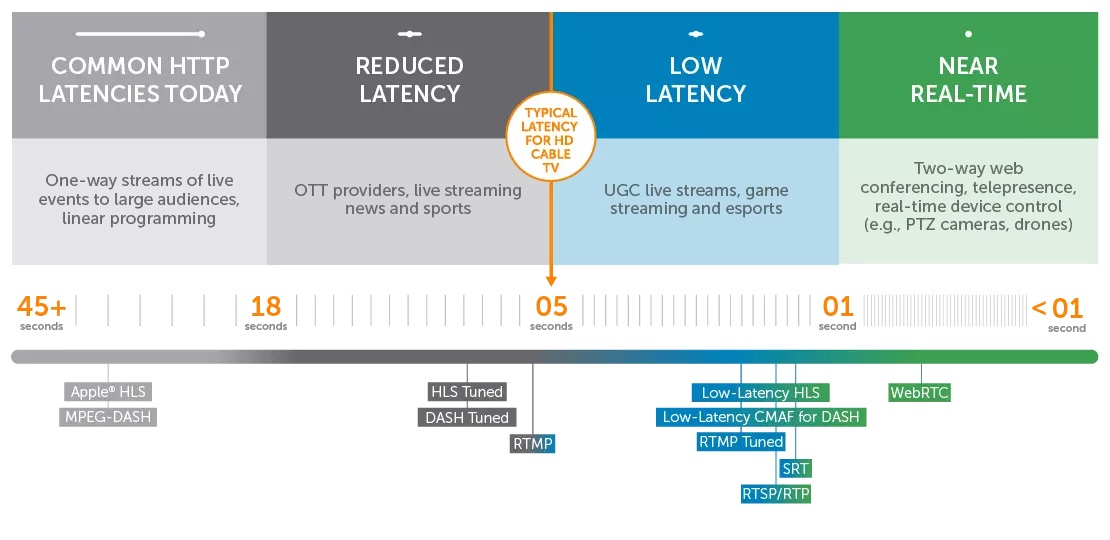

Latency

Latency refers to the time it takes for data to travel across a network. The easiest way to understand this is in terms of live streaming. When you are watching a live stream, you are likely viewing events some 30 seconds after they actually happened. This means your stream has a latency of 30 seconds. High latency refers to large delays. Low latency refers to shorter delays, typically on the order of one to five seconds. Ultra-low latency, which is the main appeal of WebRTC, refers to sub-second latency and is considered as close to real-time as you’re going to get.

Media Server

Media servers process media for group calls and broadcasting. They are not typically necessary for one-to-one calls, so their main function in WebRTC is to facilitate one-to-many streams by relieving pressure on the publishing device and conserving resources. Selective Forwarding Units (SFU) are an example of a streaming media server used in WebRTC to facilitate simulcasting. Streaming media servers can also help with transcoding and transmuxing, both of which are discussed below.

Narrowband

Narrowband refers to the speech-oriented audio spectrum of 300 to 3400 Hz, the band used by traditional telephony. It is quite limited, and it is available to WebRTC streamers through both the Opus and G.711 audio codecs.

NAT Device

A Network Address Translation (NAT) device allows for private IP networks on which unregistered IP addresses can access the internet. This is done in the spirit of IP address conservation. In other words, IP addresses are a limited commodity and now we’re trying to slow our roll before we run out completely. These private IP networks are also more secure, as they are not visible to the larger online community. The NAT device does this by obscuring the real IP address from the public. However, with more security comes more roadblocks for someone looking to establish a WebRTC streaming connection. That’s where NAT traversal servers come in.
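The roadblock a NAT device creates is easier to see in a toy model. This sketch keeps a translation table: outgoing packets from a private address get a public port, and incoming packets are delivered only if a mapping already exists. It is a simplified illustration (real NATs also track which remote hosts a mapping may receive from, and expire idle mappings); the class name and all addresses, taken from private and documentation ranges, are hypothetical.

```typescript
interface Endpoint {
  ip: string;
  port: number;
}

class ToyNat {
  readonly publicIp: string;
  private nextPort = 40000;
  // "privateIp:port" -> public port
  private outbound = new Map<string, number>();
  // public port -> private endpoint
  private inbound = new Map<number, Endpoint>();

  constructor(publicIp: string) {
    this.publicIp = publicIp;
  }

  // Outgoing packet: allocate (or reuse) a public port for this private endpoint.
  translateOutgoing(src: Endpoint): Endpoint {
    const key = `${src.ip}:${src.port}`;
    let publicPort = this.outbound.get(key);
    if (publicPort === undefined) {
      publicPort = this.nextPort++;
      this.outbound.set(key, publicPort);
      this.inbound.set(publicPort, src);
    }
    return { ip: this.publicIp, port: publicPort };
  }

  // Incoming packet: delivered only if an outgoing packet created a mapping first.
  translateIncoming(dstPort: number): Endpoint | undefined {
    return this.inbound.get(dstPort);
  }
}

const nat = new ToyNat("203.0.113.7");
const laptop: Endpoint = { ip: "192.168.1.20", port: 5000 };
const seen = nat.translateOutgoing(laptop);
console.log(seen);                             // what the outside world sees
console.log(nat.translateIncoming(seen.port)); // reaches the laptop
console.log(nat.translateIncoming(40001));     // undefined: no mapping, packet dropped
```

An outside peer can’t reach the laptop until the laptop has sent something out, and even then it needs to know the public address and port the NAT chose. Discovering that address is exactly the problem NAT traversal servers solve.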

NAT Traversal Servers

NAT traversal servers come in two types: STUN and TURN, both of which are addressed separately in this glossary. Both of them serve the same general purpose of “traversing” firewalls and NAT devices in order to establish connections between devices. However, they accomplish this task in different ways. In any case, STUN and TURN servers are lumped together under NAT Traversal Server as they tend to be a team, with the TURN server stepping up when the STUN server fails.
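In a browser, STUN and TURN servers are handed to WebRTC through the `iceServers` list of the `RTCPeerConnection` configuration. The interfaces below mirror the standard `RTCConfiguration` and `RTCIceServer` fields so the snippet also runs outside a browser; the server hostnames and credentials are placeholders, and `hasTurnServer` is a hypothetical helper added for illustration.

```typescript
// Mirrors a subset of the standard RTCIceServer / RTCConfiguration fields.
interface IceServerConfig {
  urls: string | string[];
  username?: string;
  credential?: string;
}

interface PeerConnectionConfig {
  iceServers: IceServerConfig[];
  iceTransportPolicy?: "all" | "relay";
}

const peerConfig: PeerConnectionConfig = {
  iceServers: [
    // STUN: lets the client discover its public (server-reflexive) address.
    { urls: "stun:stun.example.com:3478" },
    // TURN: relays media when no direct path works; requires credentials.
    {
      urls: ["turn:turn.example.com:3478?transport=udp", "turns:turn.example.com:5349?transport=tcp"],
      username: "example-user",
      credential: "example-password",
    },
  ],
  // "all" (the default) lets ICE use every candidate type; "relay" forces TURN only.
  iceTransportPolicy: "all",
};

// Hypothetical helper: does this configuration include a TURN fallback?
function hasTurnServer(config: PeerConnectionConfig): boolean {
  return config.iceServers.some((server) =>
    (Array.isArray(server.urls) ? server.urls : [server.urls]).some((url) => /^turns?:/.test(url)),
  );
}

console.log(hasTurnServer(peerConfig)); // true
// In a browser (not run here): const pc = new RTCPeerConnection(peerConfig);
```

Listing both types reflects the teamwork described above: ICE tries the STUN-derived addresses first and falls back to the TURN relay only if those fail.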

Opus

Appropriately named for a term meaning an artist’s work or body of work, especially in music, Opus is the audio codec most commonly used in WebRTC streaming. It is the more versatile of the two mandatory WebRTC audio codecs, Opus and G.711. Opus supports narrowband, wideband, and even wider audio bandwidths (more on narrowband and wideband in their respective sections), performs well at low bitrates, and is resilient to packet loss.

Protocol

In your WebRTC explorations, you’re going to hear this term thrown around a lot. Put simply, a protocol is a set of rules for transmitting data across a network. There are many different kinds of protocols, and they work together to achieve a successful stream. Common protocols used by WebRTC include the STUN and TURN protocols used for NAT traversal and the ICE protocol, which coordinates them to find a working connection path.

RTC

Real-Time Communications (RTC) essentially describes interactive streaming (see section on interactivity). WebRTC enables the type of ultra-low latency required to make this possible.

RTMP

The Real-Time Messaging Protocol (RTMP) has long been the standard for live streaming. After Flash, for which it was developed, fell by the wayside, RTMP remained the standard ingest protocol thanks to its reliability, flexibility, and speed. Supporting RTMP ingest in a WebRTC workflow accommodates publishers who already use RTMP encoders, since they don’t have to change their setup. Instead, they can just send their data via RTMP to the media server, which takes care of the rest.

RTSP

The Real-Time Streaming Protocol (RTSP) establishes and maintains a connection between the source of raw data and the server. More than that, it’s known for allowing remote control of the stream, including pause and play functionality. RTSP is the ingest protocol of choice for closed-circuit solutions, like surveillance systems. This protocol can be used in concert with WebRTC for real-time surveillance.
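RTSP’s remote-control style is visible in its text-based requests, which look a lot like HTTP. This minimal sketch builds RTSP/1.0 requests such as DESCRIBE, PLAY, and PAUSE; the camera URL and session ID are hypothetical, and a real client would also send SETUP and parse the server’s responses.

```typescript
type RtspMethod = "OPTIONS" | "DESCRIBE" | "SETUP" | "PLAY" | "PAUSE" | "TEARDOWN";

// Builds the text of an RTSP/1.0 request. CSeq numbers each request so that
// responses can be matched to the request they answer.
function buildRtspRequest(
  method: RtspMethod,
  url: string,
  cseq: number,
  headers: Record<string, string> = {},
): string {
  const lines = [`${method} ${url} RTSP/1.0`, `CSeq: ${cseq}`];
  for (const key of Object.keys(headers)) {
    lines.push(`${key}: ${headers[key]}`);
  }
  // Each line ends with CRLF, and an empty line terminates the header block.
  return lines.join("\r\n") + "\r\n\r\n";
}

const cameraUrl = "rtsp://camera.example.com/stream1";
// Ask the camera to describe its stream (the answer is SDP).
console.log(buildRtspRequest("DESCRIBE", cameraUrl, 2, { Accept: "application/sdp" }));
// After SETUP, the server assigns a Session ID that later requests must echo back.
console.log(buildRtspRequest("PAUSE", cameraUrl, 5, { Session: "12345678" }));
```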

SFU

A Selective Forwarding Unit (SFU) server can act as a simple intermediary between WebRTC publishers and the end-user devices. It helps WebRTC get around issues related to scalability by facilitating both simulcasting and SVC workflows. SFU servers do not decode, re-encode, or otherwise alter the media. They simply take encoded media from the sender and select which stream (or layer, in the case of SVC) to pass on to each viewer based on available bandwidth.
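The “select” in Selective Forwarding Unit can be sketched as a per-viewer decision: forward the best encoding that fits the viewer’s estimated bandwidth. The bitrates, the 0.9 headroom factor, and the `q`/`h`/`f` identifiers (a naming convention commonly used in examples) are illustrative assumptions, not values from any particular SFU.

```typescript
interface SimulcastStream {
  rid: string;        // identifier of the encoding
  bitrateBps: number; // approximate bitrate of that encoding
}

// Picks the highest-bitrate stream that fits within a safety margin of the viewer's
// bandwidth. Assumes at least one stream is published.
function selectStream(streams: SimulcastStream[], viewerBandwidthBps: number, headroom = 0.9): SimulcastStream {
  const budget = viewerBandwidthBps * headroom;
  // Sort highest bitrate first, so the first stream that fits is the best affordable one.
  const byBitrateDesc = [...streams].sort((a, b) => b.bitrateBps - a.bitrateBps);
  const fitting = byBitrateDesc.find((s) => s.bitrateBps <= budget);
  // If nothing fits, fall back to the lowest encoding rather than sending nothing.
  return fitting ?? byBitrateDesc[byBitrateDesc.length - 1];
}

const published: SimulcastStream[] = [
  { rid: "q", bitrateBps: 150_000 },
  { rid: "h", bitrateBps: 500_000 },
  { rid: "f", bitrateBps: 1_500_000 },
];

for (const bandwidth of [3_000_000, 800_000, 100_000]) {
  console.log(bandwidth, "->", selectStream(published, bandwidth).rid);
}
```

Because this decision is made separately for every viewer, one viewer on a weak connection no longer drags down the quality for everyone else.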

Signaling Protocols

A signaling protocol defines the “messages” a signaling server uses to establish a connection. WebRTC deliberately does not specify one, so it can use a handful of different signaling protocols, including XMPP, MQTT, and SIP over WebSocket. It’s also possible to use a proprietary signaling protocol for your unique WebRTC needs.

Signaling Server

A signaling server coordinates the setup of a WebRTC connection using a signaling protocol. For example, in a call over a network, it helps the individual clients find each other by relaying their session descriptions (SDP offers and answers) and ICE candidates, and it lets either side announce when the call ends. The media itself does not pass through the signaling server; once the peers have exchanged this information, they connect directly (or through a TURN relay). It’s basically WebRTC knocking at a client’s door and asking to be let in.
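Because WebRTC leaves signaling to the application, a signaling server can be as simple as a relay. This sketch assumes a hypothetical JSON-style message format and an in-memory “room” that forwards each message to the other participants; a real server would sit behind WebSocket or HTTP, authenticate clients, and handle reconnects.

```typescript
// Hypothetical message format; real deployments may use XMPP, SIP, MQTT, or their own.
type SignalMessage =
  | { kind: "offer"; from: string; sdp: string }
  | { kind: "answer"; from: string; sdp: string }
  | { kind: "candidate"; from: string; candidate: string }
  | { kind: "bye"; from: string };

type Deliver = (msg: SignalMessage) => void;

class SignalingRoom {
  private peers = new Map<string, Deliver>();

  join(peerId: string, deliver: Deliver): void {
    this.peers.set(peerId, deliver);
  }

  // Removing a peer also tells everyone else that the call has ended.
  leave(peerId: string): void {
    this.peers.delete(peerId);
    this.relay({ kind: "bye", from: peerId });
  }

  // The server never inspects or alters the SDP; it only forwards it to the other peers.
  relay(msg: SignalMessage): void {
    this.peers.forEach((deliver, peerId) => {
      if (peerId !== msg.from) deliver(msg);
    });
  }
}

const room = new SignalingRoom();
const inboxBob: SignalMessage[] = [];
room.join("alice", () => {});
room.join("bob", (msg) => inboxBob.push(msg));
room.relay({ kind: "offer", from: "alice", sdp: "placeholder-offer-sdp" });
room.leave("alice");
console.log(inboxBob.map((m) => m.kind));
```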

Simulcast

Not to be confused with traditional simulcasting, which involves streaming to multiple platforms simultaneously, WebRTC simulcasting is the process by which WebRTC encodes a few discrete versions of the raw media (at a few different bitrates) and sends those to a selective forwarding unit (SFU) server, which chooses which individual versions of the stream to send to which end-user device based on available bandwidth. This is an effective way to improve stream scalability, which has historically been a challenge for WebRTC.
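In the browser, simulcast is requested through the `sendEncodings` option of `RTCPeerConnection.addTransceiver()`. The interface below mirrors a subset of the standard `RTCRtpEncodingParameters` fields so the snippet runs outside a browser; the bitrates and scale factors are illustrative choices, and `layerResolutions` is a hypothetical helper.

```typescript
// Subset of the standard RTCRtpEncodingParameters fields used for simulcast.
interface SimulcastEncoding {
  rid: string;
  scaleResolutionDownBy: number; // 2 = half width and half height
  maxBitrate: number;            // bits per second
}

const sendEncodings: SimulcastEncoding[] = [
  { rid: "q", scaleResolutionDownBy: 4, maxBitrate: 150_000 },
  { rid: "h", scaleResolutionDownBy: 2, maxBitrate: 500_000 },
  { rid: "f", scaleResolutionDownBy: 1, maxBitrate: 1_500_000 },
];

// Resolution each encoding would have for a given capture size.
function layerResolutions(width: number, height: number, encodings: SimulcastEncoding[]) {
  return encodings.map((e) => ({
    rid: e.rid,
    width: Math.round(width / e.scaleResolutionDownBy),
    height: Math.round(height / e.scaleResolutionDownBy),
  }));
}

console.log(layerResolutions(1280, 720, sendEncodings));
// The publisher uploads every encoding at once.
console.log(sendEncodings.reduce((sum, e) => sum + e.maxBitrate, 0), "bps total upload at most");

// In a browser (not run here):
// pc.addTransceiver(videoTrack, { direction: "sendonly", sendEncodings });
```

The trade-off is visible in the total: the publisher spends extra upload bandwidth (here up to about 2.15 Mbps for three encodings) so the SFU has something suitable for every viewer.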

STUN Server

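Session Traversal Utilities for NAT (STUN) servers are one half of the NAT traversal pie. A STUN server sits on a public IP address and tells each device on a call what its own public IP address and port look like from outside its NAT device, a process governed by the STUN protocol. The devices can then use those public addresses to reach each other directly through the firewall and NAT device. Honestly, the solution here is elegant in its simplicity. A device asks, “Who am I?” and the STUN server responds with that device’s public IP address. But it’s not a foolproof method: some NAT devices and firewalls (symmetric NATs, for example) assign a different public address for each destination or block direct traffic, so the address STUN reports doesn’t work for the other peer.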
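The “Who am I?” exchange is a STUN Binding Request, answered with an XOR-MAPPED-ADDRESS attribute. This minimal sketch, based on RFC 5389, builds the 20-byte request header and decodes an IPv4 XOR-MAPPED-ADDRESS value; it omits sending over UDP, response parsing beyond that attribute, and IPv6. The transaction ID is passed in so the example stays deterministic, whereas real clients use 12 cryptographically random bytes.

```typescript
// Fixed value that appears in every STUN header (RFC 5389).
const MAGIC_COOKIE = 0x2112a442;

function buildBindingRequest(transactionId: Uint8Array): Uint8Array {
  if (transactionId.length !== 12) throw new Error("transaction ID must be 12 bytes");
  const msg = new Uint8Array(20);
  const view = new DataView(msg.buffer);  // DataView defaults to big-endian (network order)
  view.setUint16(0, 0x0001);              // message type: Binding Request
  view.setUint16(2, 0);                   // message length: no attributes follow
  view.setUint32(4, MAGIC_COOKIE);        // magic cookie
  msg.set(transactionId, 8);              // 96-bit transaction ID
  return msg;
}

// Decodes an IPv4 XOR-MAPPED-ADDRESS attribute value: the public address the STUN
// server saw, XORed with the magic cookie so middleboxes don't rewrite it.
function decodeXorMappedIPv4(value: Uint8Array): { ip: string; port: number } {
  const view = new DataView(value.buffer, value.byteOffset, value.byteLength);
  if (view.getUint8(1) !== 0x01) throw new Error("not an IPv4 address");
  const port = view.getUint16(2) ^ (MAGIC_COOKIE >>> 16);
  const addr = (view.getUint32(4) ^ MAGIC_COOKIE) >>> 0;
  const ip = [24, 16, 8, 0].map((shift) => (addr >>> shift) & 0xff).join(".");
  return { ip, port };
}
```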

SVC

Scalable Video Coding (SVC) is a WebRTC extension that allows an encoder to encode raw media data a single time but in multiple layers. It helps to think of this in contrast to WebRTC simulcasting. Picture three distinct streaming alternatives for the same media data sent independently to an SFU server for triaging. That’s simulcasting. Now picture the same publisher encoding a single stream with multiple bitrate options layered together (like a layer cake). That’s SVC at work. When being sent along to end-user devices, the SFU peels away layers of this cake as needed to achieve the appropriate bitrate for the given device.
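The layer cake has one important property: layers are cumulative, so a viewer needs every layer below the one it receives. This sketch shows how an SFU might peel away layers for a given viewer. The per-layer bitrates and labels are made-up illustrative numbers, and real SVC also has temporal layers (frame rate), which this sketch ignores.

```typescript
interface SvcLayer {
  spatialId: number;       // 0 = base layer
  label: string;
  layerBitrateBps: number; // bitrate added by this layer on top of the ones below
}

// Forwards layers 0..k, stopping before the first layer that would exceed the viewer's
// bandwidth. The base layer is always sent so the viewer gets at least something.
function layersToForward(layers: SvcLayer[], viewerBandwidthBps: number): SvcLayer[] {
  const ordered = [...layers].sort((a, b) => a.spatialId - b.spatialId);
  const forwarded: SvcLayer[] = [];
  let total = 0;
  for (const layer of ordered) {
    if (forwarded.length > 0 && total + layer.layerBitrateBps > viewerBandwidthBps) break;
    total += layer.layerBitrateBps;
    forwarded.push(layer);
  }
  return forwarded;
}

const exampleLayers: SvcLayer[] = [
  { spatialId: 0, label: "180p", layerBitrateBps: 150_000 },
  { spatialId: 1, label: "360p", layerBitrateBps: 350_000 },
  { spatialId: 2, label: "720p", layerBitrateBps: 1_000_000 },
];

for (const bandwidth of [2_000_000, 800_000, 100_000]) {
  console.log(bandwidth, "->", layersToForward(exampleLayers, bandwidth).map((l) => l.label));
}
```

Compare this with simulcast, where the SFU picks one complete, independent stream; here it trims a single stream down to the layers the viewer can afford.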

Transcoding

Transcoding is when you take already encoded media files, decode them, alter them in some way, and re-encode them. This can be done to change their resolution (transizing) or bitrate (transrating) and is commonly done for the purposes of adaptive bitrate streaming (ABR), which prepares multiple chunks of a data stream at multiple bitrates and resolutions for dynamic streaming to a variety of devices.

Transmuxing

Often confused with transcoding, transmuxing also seeks to repackage encoded media files. However, it doesn’t decode these files; it simply converts the container file formats (i.e. the outside of the data package). Let’s say you are shipping pillows to a friend. Transcoding might involve opening the package and smooshing the pillows down so they can fit in a smaller box, then repackaging them in said smaller box. Transmuxing is just taking the package you have and slapping a fresh shipping label on it.

TURN Server

If a direct connection can’t be established using the address the STUN server provided, the Traversal Using Relays around NAT (TURN) server steps up. The TURN server uses the TURN protocol to route the necessary data around the firewall rather than through it. It does this by relaying the data you want to send: your media goes to the TURN server, which forwards it on, acting as an intermediary between you and the end-user devices. Of course, this comes at a cost, since the TURN server must carry all of that media traffic and therefore consumes a significant amount of bandwidth.

Video Codecs

Just as audio codecs are codecs for audio, video codecs are codecs for your raw video media files. These codecs encode (compress) raw video media files for transport across a network and decode (decompress) those same files for playback on the end-user device. The mandatory video codecs for WebRTC are VP8 and AVC/H.264, which every WebRTC browser must support; VP9 and AV1 are widely supported but optional.

| Codec Suitability | Live Origination | Live Transcode | Low Latency | 4K | HDR |
| --- | --- | --- | --- | --- | --- |
| H.264 | Excellent | Excellent | Excellent | Poor | Poor |
| VP9 | Poor | Poor | WebRTC | Excellent | Poor |
| HEVC | Good | Good | Nascent | Excellent | Excellent |
| AV1 | Nascent | Nascent | WebRTC | Excellent | Nascent |

VP9

Like WebRTC, VP9 is an open-source technology, courtesy of Google. It is a video codec that can be used with WebRTC and is widely considered the successor to VP8. VP9 is one of the video codecs that support scalable video coding (SVC) in WebRTC; AV1 is another. Read more about SVC in its designated section.

WebRTC Server

Technically, WebRTC doesn’t need a server in a peer-to-peer connection on a local area network. In these cases, the end-user device essentially acts as the server. However, there are numerous WebRTC workflows that require servers not accounted for in the out-of-the-box WebRTC solution, including anything that involves connecting over the internet. For example, a selective forwarding unit (SFU) server can facilitate WebRTC simulcast or SVC streams. Other servers for NAT traversal, signaling, application, and media, serve various purposes in facilitating communication between the publisher and end-user devices. We address each of these in their respective sections.

WHIP

Millicast developed the WebRTC-HTTP Ingestion Protocol (WHIP) to improve the media ingest portion of the WebRTC workflow. Before WHIP, WebRTC lacked a standard signaling protocol for ingest, so encoding software and hardware had no common way to connect to WebRTC servers. WHIP resolves this with a simple HTTP-based exchange: the publisher sends its SDP offer to a WHIP endpoint in an HTTP POST request and receives the server’s SDP answer in the response. This standardization and simplification of media ingest also serves to “complete” WebRTC on the sender side, meaning that encoders can now support it more easily.
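The whole WHIP handshake fits in a few HTTP requests: POST the SDP offer, read the SDP answer from a `201 Created` response whose `Location` header identifies the session, and DELETE that resource to stop publishing. This sketch only builds and interprets those messages, so it can run without a server; the endpoint URL, token, and SDP strings are placeholders, and the function names are hypothetical.

```typescript
interface WhipHttpRequest {
  method: "POST" | "DELETE";
  url: string;
  headers: Record<string, string>;
  body?: string;
}

function buildWhipOffer(endpoint: string, offerSdp: string, bearerToken?: string): WhipHttpRequest {
  const headers: Record<string, string> = { "Content-Type": "application/sdp" };
  if (bearerToken !== undefined) headers["Authorization"] = `Bearer ${bearerToken}`;
  return { method: "POST", url: endpoint, headers, body: offerSdp };
}

// Interprets the server's reply: the body is the SDP answer, and the Location header
// (possibly relative) points at the session resource.
function parseWhipAnswer(endpoint: string, httpStatus: number, locationHeader: string | null, body: string) {
  if (httpStatus !== 201) throw new Error(`WHIP endpoint returned HTTP ${httpStatus}`);
  if (locationHeader === null) throw new Error("missing Location header");
  return { answerSdp: body, resourceUrl: new URL(locationHeader, endpoint).toString() };
}

// Ending the session is an HTTP DELETE on the session resource.
function buildWhipTeardown(resourceUrl: string, bearerToken?: string): WhipHttpRequest {
  const headers: Record<string, string> = {};
  if (bearerToken !== undefined) headers["Authorization"] = `Bearer ${bearerToken}`;
  return { method: "DELETE", url: resourceUrl, headers };
}

const endpoint = "https://whip.example.com/publish/my-stream";
const postReq = buildWhipOffer(endpoint, "placeholder-offer-sdp", "example-token");
// With fetch (a browser or Node 18+), not run here:
// const res = await fetch(postReq.url, { method: postReq.method, headers: postReq.headers, body: postReq.body });
// const session = parseWhipAnswer(endpoint, res.status, res.headers.get("Location"), await res.text());
const session = parseWhipAnswer(endpoint, 201, "/resource/abc123", "placeholder-answer-sdp");
console.log(session.resourceUrl); // https://whip.example.com/resource/abc123
```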

Wideband

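Wideband refers to an audio spectrum of roughly 300 to 7600 Hz. It is considered good quality for speech and is available to WebRTC streamers through the Opus audio codec. It shouldn’t be confused with fullband, which covers the full range of human hearing (up to about 20 kHz) and is also supported by Opus.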
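The upper edge of each band determines how fast the audio must be sampled: by the Nyquist theorem, the sample rate must be more than twice the highest frequency you want to keep. This small check uses the band edges given in this glossary against the sample rates conventionally associated with each band (8 kHz for narrowband, 16 kHz for wideband).

```typescript
// Highest frequency a given sample rate can represent.
function nyquistLimitHz(sampleRateHz: number): number {
  return sampleRateHz / 2;
}

const bands = [
  { band: "narrowband", upperHz: 3_400, sampleRateHz: 8_000 },
  { band: "wideband", upperHz: 7_600, sampleRateHz: 16_000 },
];

for (const b of bands) {
  const enough = b.upperHz < nyquistLimitHz(b.sampleRateHz);
  console.log(
    `${b.band}: ${b.upperHz} Hz needs > ${2 * b.upperHz} Hz sampling; ` +
      `${b.sampleRateHz} Hz is ${enough ? "enough" : "not enough"}`,
  );
}
```

Doubling the sample rate roughly doubles the raw data to be encoded, which is part of why wider audio bands cost more bitrate.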

Conclusion

While this list should give you a solid foundation in WebRTC-related terminology, there is still a lot more ground to cover if you want to truly understand how WebRTC works and how to implement it for a wider audience. Stay tuned for our WebRTC deep dive, which will be available for free alongside our other reports and guides.