Video Developer Trends 2022

I recently attended two of my favorite events — Demuxed and the Foundations of Open Media Standards and Software (FOMS) workshop — in person. Each year, streaming engineers like myself gather at these conferences to touch base on where video technologies are headed and how the community can work together to address shared frustrations, emerging standards, and industry trends.

As a group, we video developers feel at home in an acronym-riddled, fast-moving, and complex space. Technologies deemed cutting-edge at one point (*cough* 1080p) can become passé in a matter of years. Likewise, budding technologies like 360° virtual reality (VR) and NFTs often generate early buzz but then take quite some time to become commercially viable.

And this all makes sense. Video itself is 150 years young, with the history of streaming only dating back a few decades. That’s why conferences like Demuxed and FOMS are so crucial to keeping everyone in conversation as we build the future of video.

A handful of topics found their way into discussions at both developer gatherings, which I cover below. But first, check out the Spirit Halloween costume meme we created to personify our small but mighty cohort.

1. The Greening of Streaming

Awareness has grown in recent years of the energy required to distribute video and of the need for less wasteful practices. For example, if 95% of your viewers only ever watch the highest-bitrate option provided, do you really need seven rungs on the encoding ladder, or could you get away with just a handful? What if we had better metrics to help us make that decision?
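
One way to ground that decision in data is to measure how much watch time each rung actually receives. The sketch below is only an illustration: it assumes you can export per-rendition watch time from your player analytics, and the rendition labels, the `min_share` threshold, and the function name are all hypothetical rather than taken from any particular product.

```python
from typing import Dict, List


def rungs_worth_keeping(watch_seconds: Dict[str, float], min_share: float = 0.02) -> List[str]:
    """Return the renditions whose share of total watch time is at least min_share.

    watch_seconds maps a rendition label (for example "1080p") to the total
    seconds viewers spent on it. Labels and threshold are illustrative only.
    """
    total = sum(watch_seconds.values())
    if total <= 0:
        return []
    return [name for name, secs in watch_seconds.items() if secs / total >= min_share]


# Made-up numbers for a seven-rung ladder where 95% of viewing is on the top rung.
sample = {
    "1080p": 9500, "720p": 300, "540p": 100, "432p": 50,
    "360p": 30, "270p": 15, "234p": 5,
}
kept = rungs_worth_keeping(sample)  # rungs at or above a 2% share of watch time
```

A share threshold alone is a blunt instrument. In practice you would probably keep at least one low rung as a safety net for viewers on poor connections, whatever its share of watch time.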

With streaming becoming more widespread each day, and video accounting for 80% of total internet traffic, improving efficiency just makes sense. What’s more, opportunities for doing so span the entire ecosystem.

At the source, content-aware encoding can deliver better-quality video at a lower bitrate. At the other end of the delivery pipeline, energy consumption is at its highest when consumers individually decode a single video stream across their myriad devices. Here, video analytics could better inform broadcasters by estimating the carbon footprint of each streaming session based on device, display resolution, screen brightness, codecs used, and information from the content delivery networks (CDNs) themselves.

2. Shifting Dynamics in Low-Latency Streaming

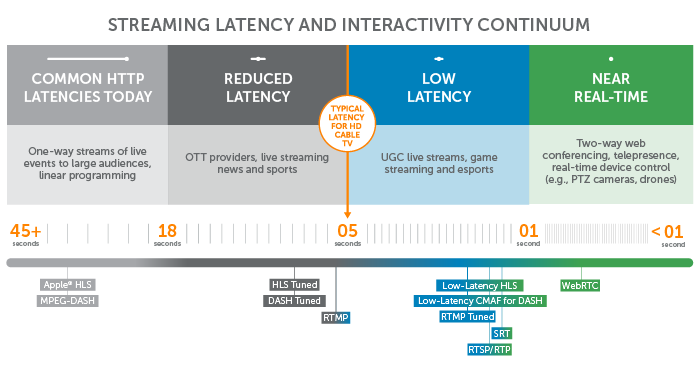

Unlike in years prior, Low-Latency HLS and low-latency CMAF for DASH weren’t hot topics at Demuxed and FOMS. I partly attribute this to the fact that three seconds of latency is becoming a no-man’s land. Content distributors either need the sub-second delivery critical to interactive video experiences, or they’re comfortable with the 6+ second (or maybe closer to 30-second) delay we’ve come to expect.

That means that when it comes to low-latency streaming, we’re back to focusing on Web Real-Time Communications (WebRTC) — which clocks in at sub-500 millisecond delivery.

“WebRTC truly is amazing,” said Dan Jenkins of Broadcaster VC during his talk at Demuxed, “but it also sucks.” He went on to discuss frustrations with the WebRTC browser APIs, inconsistent user experiences across Chrome and Safari, obstacles with developing WebRTC on mobile, and more.

And the truth is, the history between WebRTC and the broadcast world has been a bumpy one. The chat-based protocol simply wasn’t designed for large-scale streaming media. Likewise, because the real-time format lacks a standard signaling protocol, it hasn’t always been a great fit for content distributors wanting to stream produced live content with a professional encoder.

But that’s all changing.

The WebRTC-HTTP Ingestion Protocol (WHIP) now gives encoding software and hardware a simple way to carry out the WebRTC negotiation process: an HTTP POST exchanges the Session Description Protocol (SDP) information with the media server, which enables WebRTC ingest across vendors. Likewise, Wowza’s Real-Time Streaming at Scale feature for Wowza Video uses a custom CDN to accommodate audiences of up to a million viewers while maintaining lightning-fast delivery.
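
To make the WHIP exchange concrete, here is a minimal sketch of the HTTP side of the negotiation using only the Python standard library. It assumes the basic flow described in the WHIP specification: the encoder POSTs its SDP offer with a `Content-Type` of `application/sdp`, and a successful response is `201 Created` with the SDP answer in the body and a `Location` header identifying the session. The endpoint URL, the token, and the function names are hypothetical, and generating the SDP offer itself (the job of a WebRTC stack) is out of scope here.

```python
import urllib.request
from typing import Optional, Tuple


def build_whip_offer_request(endpoint_url: str, sdp_offer: str,
                             bearer_token: str = "") -> urllib.request.Request:
    """Build (but do not send) the HTTP POST that carries an SDP offer to a WHIP endpoint."""
    headers = {"Content-Type": "application/sdp"}
    if bearer_token:
        # WHIP endpoints commonly authenticate with a bearer token.
        headers["Authorization"] = f"Bearer {bearer_token}"
    return urllib.request.Request(
        endpoint_url,
        data=sdp_offer.encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send_whip_offer(request: urllib.request.Request) -> Tuple[str, Optional[str]]:
    """Send the offer and return (sdp_answer, session_url).

    session_url comes from the Location header and may be relative to the
    endpoint. Sending an HTTP DELETE to it later ends the session.
    """
    with urllib.request.urlopen(request) as response:
        answer_sdp = response.read().decode("utf-8")
        session_url = response.headers.get("Location")
    return answer_sdp, session_url
```

Because the whole signaling step is a single request and response, any encoder that can make an HTTPS call can, in principle, publish to any WHIP-capable media server.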

WHIP-supported encoders today include the Osprey Talon 4K-SC, GStreamer, and Flowcaster, all of which can be paired with Wowza’s Real-Time Streaming at Scale solution to deliver high-quality, low-latency video to viral audiences.

Yes, WebRTC is still a work in progress, but that’s part of its beauty. Software developers across the world are contributing code to the open-source framework daily, improving interoperability and functionality along the way. Will it ultimately be replaced by emerging standards like Media over QUIC (MoQ) or a combination of WebTransport and WebCodecs? Maybe, but that’s a blog for another day.

3. Accessibility Requires Synthesizing Humans and Tech

From compliance and searchability to accessibility and watchability (e.g., the ability to consume a live stream with the volume off), video captioning is a crucial technology. Artificial intelligence and machine learning (AI/ML) have made impressive leaps in improving accessibility across the video landscape. But without built-in viewer feedback, these too often fall short.

Podium Media’s Leon Lyakovestsky gave a talk at Demuxed about combining automation technologies with a human touch to improve accessibility. He prefaced his talk with a surprising assertion: “If you’re watching a video for longer than a minute and you see no errors, you can thank the humans for that.”

Specifically, today’s workflows often combine auto-generated speech-to-text with human review, markup, and corrections to transcribe videos. Without the human step, accuracy falls in the 85-95% range. Not only would an error rate of up to 15% be frustrating for an end user to interpret, it would also fail to meet compliance requirements.
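
The talk summary doesn’t say which accuracy metric those figures refer to. One common way to quantify transcript quality is word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the automatic transcript into the human-corrected one, divided by the number of words in the corrected version. The following is a minimal sketch under that assumption; the function name and sample sentences are made up for illustration.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance between hypothesis and reference, divided by reference length."""
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # previous[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1       # reference word missing from hypothesis
            insertion = current[j - 1] + 1   # extra word in hypothesis
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


human_corrected = "the quick brown fox jumps over the lazy dog today"
auto_generated = "the quick brown box jumps over the lazy dog"
wer = word_error_rate(human_corrected, auto_generated)  # one substitution plus one deletion over ten words
```

Seen this way, 85% accuracy corresponds to roughly one wrong word in every seven, which is easy to notice in a caption track.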

So how do we resource this step of the video captioning workflow? One possibility is to crowdsource quality assurance (QA) by tasking the actual viewers with adjusting incorrect captions. Like a Wikipedia-style approach to captioning, this user-powered strategy could improve overall user experience and empower any viewers eager to contribute (I know our copywriters at Wowza would struggle not to edit transcripts when given a chance).

4. Ongoing Push for Standardization and Integration

The streaming industry has always had a knack for making video delivery unnecessarily complex with its abundance of proprietary technologies and formats. From competing protocols that do the same thing (we’re looking at you, HLS and DASH) to the challenges that come with stream monitoring and end-to-end visibility, standardization could solve a lot of video developers’ woes.

MPEG-DASH was itself designed to alleviate this pain point, as was the Common Media Application Format (CMAF). The same is true of today’s open-source, royalty-free codecs like AOMedia Video 1 (AV1). Despite this, HLS remains the most popular protocol for delivery, and the majority of encoded output today takes the form of royalty-bearing H.264/AVC. Things like patent pools, hardware support, metadata, and accessibility all tend to get their own twist with each new codec or protocol.

Another place we’re seeing a push for standardization is in streaming analytics. End-to-end video monitoring requires integration between the encoder, packager, content delivery network (CDN), and player, which is why video developers are often left in the dark about quality of experience.

Two standards, Common Media Client Data (CMCD) and Common Media Server Data (CMSD), are poised to improve communication and interoperability across the streaming workflow by normalizing metrics. When leveraged to their full potential, these technologies could let a CDN know that a player is rebuffering and then route that viewer to a different point of presence (PoP). Likewise, CMSD could ensure traceability from the origin out to the client.
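
As a rough illustration of the rebuffering case, the sketch below parses the client data a player can attach to a segment request as a `CMCD` query argument. It assumes the query-argument form of Common Media Client Data (CMCD), in which the payload is a URL-encoded, comma-separated list of keys; `bs` (buffer starvation) is a valueless flag, `bl` is the buffer length in milliseconds, `br` is the encoded bitrate in kbps, and `sid` is a quoted session ID. The URL and function names are hypothetical, and the parser is simplified (it does not handle commas inside quoted strings).

```python
from typing import Dict, Union
from urllib.parse import parse_qs, urlsplit

CmcdValue = Union[bool, int, float, str]


def parse_cmcd_query(url: str) -> Dict[str, CmcdValue]:
    """Extract CMCD key/value pairs from the CMCD query argument of a request URL."""
    query = parse_qs(urlsplit(url).query)    # parse_qs percent-decodes the values
    payload = query.get("CMCD", [""])[0]
    result: Dict[str, CmcdValue] = {}
    for item in payload.split(","):
        if not item:
            continue
        key, separator, raw = item.partition("=")
        if not separator:
            result[key] = True               # valueless key means boolean true
        elif len(raw) >= 2 and raw.startswith('"') and raw.endswith('"'):
            result[key] = raw[1:-1]          # quoted string
        else:
            try:
                result[key] = int(raw)
            except ValueError:
                try:
                    result[key] = float(raw)
                except ValueError:
                    result[key] = raw        # bare token such as an object type
    return result


def player_is_rebuffering(url: str) -> bool:
    """True when the request reports buffer starvation via the bs flag."""
    return parse_cmcd_query(url).get("bs") is True


segment_request = (
    "https://cdn.example.com/video/seg_42.m4s"
    "?CMCD=bl%3D0%2Cbr%3D3200%2Cbs%2Csid%3D%22session-1%22"
)
client_data = parse_cmcd_query(segment_request)
```

A CDN edge or log pipeline could apply a check like `player_is_rebuffering` per request and feed the result into its routing or alerting logic. How that decision is made is outside what the standard defines.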

Conclusion

Across the industry, the consensus is clear: Video has been around long enough. Why can’t we all work together to make things less complex?

We share this viewpoint, and it’s one of the reasons Wowza released our integrated Wowza Video platform earlier this year. In addition to providing a single solution with observability from encode through playback, our video API offers the flexibility to integrate with external tools if desired.

We’re also dedicated to guiding video enthusiasts through the world of streaming and collaborating with others in the industry. To stay up to date on all the technology updates and industry trends we come across, don’t forget to subscribe to the Wowza blog.
