Low-Latency Claims and How to Decipher Them

From ‘glass-to-glass latency’ to ‘near real time,’ streaming professionals throw around a lot of terms to describe video delay. How do the different latency claims stack up, and what should you look for? It all depends on what you’re trying to achieve.

What Is Latency?

Latency describes the delay between when a video is captured and when it’s displayed on a viewer’s device. Passing chunks of data from one place to another takes time, so latency builds up at every step of the streaming workflow.

What Is Glass-to-Glass Latency?

Encoding. Transcoding. Delivery. Playback. Each stage can increase latency. For that reason, you’ll want to look for claims of end-to-end or glass-to-glass latency, which account for the total delay between the source and the viewer. Other terms, like ‘capture latency’ or ‘player latency,’ only account for the lag introduced at one specific step of the streaming workflow.
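Glass-to-glass latency is the sum of the delay added at every stage. A vendor's per-stage figure can therefore badly understate what viewers actually experience. The sketch below totals a simple latency budget. The stage names and millisecond values are hypothetical placeholders, not measurements.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    """One step of a streaming workflow and the delay it adds (hypothetical values)."""
    name: str
    latency_ms: float


def glass_to_glass_ms(stages: list[Stage]) -> float:
    """End-to-end latency is the sum of the delay added at every stage."""
    return sum(stage.latency_ms for stage in stages)


# Hypothetical budget for illustration only; real numbers depend on your workflow.
workflow = [
    Stage("capture", 50),
    Stage("encoding", 500),
    Stage("transcoding", 1_000),
    Stage("delivery (CDN)", 2_000),
    Stage("playback (player buffer)", 18_000),
]

total = glass_to_glass_ms(workflow)
player_only = next(s.latency_ms for s in workflow if s.name.startswith("playback"))
print(f"player latency alone: {player_only / 1000:.1f} s")
print(f"glass-to-glass:       {total / 1000:.2f} s")
```

Quoting only the player figure here would hide several seconds of delay that viewers still see.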

How Is Glass-to-Glass Latency Measured?

Measuring glass-to-glass latency is easy. Open a stopwatch program on your computer screen and film it with a camera, sending the stream through your entire workflow (encoder, transcoder if you use one, CDN, and player). Next, open your player on the same computer screen and take a screenshot that shows both windows.

The screenshot should include both the stopwatch being captured and the live stream of the stopwatch being played back. You can subtract the time shown on the video from the time shown on the stopwatch proper to get a better-than-ballpark measurement. For a more accurate reading, do this experiment several times and take an average.
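The subtraction and averaging described above can be sketched in a few lines. The readings below are hypothetical stopwatch values in seconds, one pair per screenshot: the live stopwatch reading and the older reading visible in the played-back stream.

```python
from statistics import mean


def latency_from_screenshot(stopwatch_s: float, playback_s: float) -> float:
    """Latency for one screenshot: the live stopwatch reading minus the
    (older) reading visible in the played-back stream."""
    if playback_s > stopwatch_s:
        raise ValueError("playback time cannot be ahead of the live stopwatch")
    return stopwatch_s - playback_s


def average_latency(readings: list[tuple[float, float]]) -> float:
    """Average several (stopwatch, playback) screenshot readings."""
    return mean(latency_from_screenshot(live, played) for live, played in readings)


# Hypothetical readings from three screenshots, in seconds.
samples = [(125.40, 119.10), (210.75, 204.35), (302.20, 295.80)]
print(f"average glass-to-glass latency: {average_latency(samples):.2f} s")
```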

What Is Considered Low Latency?

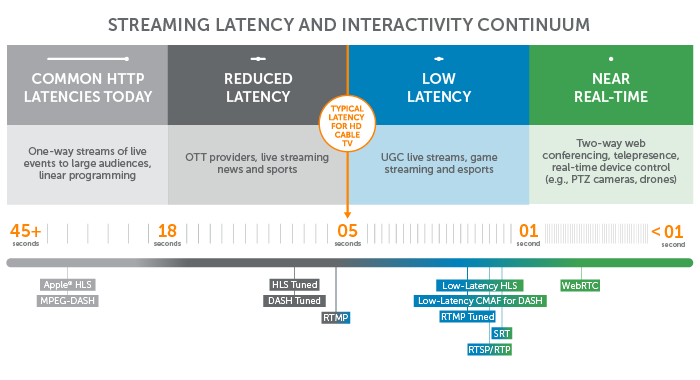

You’ll want to differentiate between the multiple categories of latency and determine which is best suited for your streaming scenario. These categories include:

- Near real time for video conferencing and remote devices

- Low latency for interactive content

- Reduced latency for live premium content

- Typical HTTP latencies for linear programming and one-way streams

You’ll notice that the more passive the broadcast, the higher latency can acceptably climb.

You’ll also notice in the chart above that the two most common HTTP-based protocols, HLS and MPEG-DASH, are at the high end of the spectrum. So why are the most popular streaming protocols also sloth-like when it comes to measurements of glass-to-glass latency?

Thanks to their ability to scale and adapt, HTTP-based protocols deliver reliable, high-quality experiences across all screens. They achieve this with technologies like buffering and adaptive bitrate streaming, both of which improve the viewing experience at the cost of added latency.

High resolution is great. But what about scenarios where a high-quality viewing experience requires lightning-fast delivery? For some use cases, getting the video where it needs to go quickly is more important than 4K resolution.

Who Needs Low Latency?

When streaming sporting events, we’d recommend a glass-to-glass latency of around five seconds — this is referred to as reduced latency. Any longer than that and the cable broadcasters you’re competing against will be one step — or touchdown, goal, home run, you name it — ahead of you.

For developers trying to integrate live video into interactive solutions — such as trivia apps, live-commerce sites, e-sports platforms, etc. — sub-five-second latency is crucial. This puts you in the low latency range.

The fastest delivery speed is reserved for two-way conferencing, military-grade bodycams, remote-control drones, and medical cameras. Any latency north of one second would make these streaming scenarios awkward at best, disastrous at worst. Here’s where near real time reigns supreme.

If your streaming application falls outside the use cases described above, it’s probably wise not to prioritize latency at all. Configuring streams for speedy delivery adds complexity that isn’t always necessary.
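The use cases above can be turned into a simple lookup from a measured glass-to-glass latency to a category. The thresholds follow the text:

- Near real time means one second or less.
- Low latency means under five seconds.
- Around five seconds counts as reduced latency.

The upper bound for reduced latency (18 seconds here) is an assumption for illustration, not a figure stated in this article.

```python
# Boundaries in seconds. The 1 s and 5 s cut-offs follow the use cases above;
# the upper bound for "reduced latency" is an assumed value for illustration.
NEAR_REAL_TIME_MAX_S = 1.0
LOW_LATENCY_MAX_S = 5.0
REDUCED_LATENCY_MAX_S = 18.0  # assumption, not taken from the chart


def latency_category(glass_to_glass_s: float) -> str:
    """Map a measured glass-to-glass latency onto the categories above."""
    if glass_to_glass_s < 0:
        raise ValueError("latency cannot be negative")
    if glass_to_glass_s <= NEAR_REAL_TIME_MAX_S:
        return "near real time"
    if glass_to_glass_s < LOW_LATENCY_MAX_S:
        return "low latency"
    if glass_to_glass_s <= REDUCED_LATENCY_MAX_S:
        return "reduced latency"
    return "typical HTTP latency"


for measured in (0.5, 3.0, 5.0, 30.0):
    print(f"{measured:>5.1f} s -> {latency_category(measured)}")
```

With these thresholds, a two-way video call measured at 0.5 seconds lands in "near real time," and a trivia app at 3 seconds lands in "low latency."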

Latency Across the Workflow

As we said, latency can be introduced at each area of the live-streaming workflow.

The primary bottlenecks include:

- Segment Length

- Player Buffering

- Encoding Efficiency

- Packaging and Protocols

- Delivery, Network Conditions, and Security

1. Segment Length

With HTTP-based streaming, there’s an inherent latency because the video data is sent in segments rather than as a continuous flow of information. This makes for a super stable streaming experience, but it means that playback doesn’t start until an entire segment has been downloaded.

Until 2016, Apple recommended using ten-second segments for HLS. It has since reduced that to six seconds, but that still means the ‘live’ stream lags behind from the get-go. DASH and Microsoft Smooth Streaming recommend shorter segment lengths. As we’ll see in the next section, though, this delay multiplies, and segment length isn’t the only source of delay.

2. Player Buffering

Most players are programmed to receive at least three segments before starting playback of streams delivered via HTTP. They hold these segments in a buffer, storing data in memory to ride out any drops in bandwidth.

Buffering helps ensure that your video continues to play despite changes in connectivity, but it also generates the most latency. To take the traditional ten-second HLS segments as an example, collecting three segments prior to playback would equate to 30 seconds of latency off the bat.
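The arithmetic above (segment duration multiplied by the number of segments the player waits for) can be sketched as follows. This is a simplified model that covers only segmenting and player buffering, using the three-segment default described above. Encoding, delivery, and network delays come on top of it.

```python
def startup_latency_s(segment_duration_s: float, segments_buffered: int = 3) -> float:
    """Delay introduced before playback when the player waits for
    `segments_buffered` full segments of `segment_duration_s` seconds each.

    This covers only segmenting and player buffering; encoding, delivery,
    and network delays add to it.
    """
    if segment_duration_s <= 0 or segments_buffered < 1:
        raise ValueError("need a positive segment duration and at least one segment")
    return segment_duration_s * segments_buffered


# Apple's older 10 s HLS recommendation versus the current 6 s one,
# each with the common three-segment buffer.
for duration in (10, 6):
    print(f"{duration} s segments -> {startup_latency_s(duration):.0f} s of latency")
```

Even with Apple's current six-second recommendation, a three-segment buffer alone puts the stream 18 seconds behind real time.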

3. Encoding Efficiency

Bitrate, resolution, which codec you use, and even segment size impact the speed of video encoding. The higher the bitrate and resolution, the longer encoding will take.

Additionally, while reducing segment size helps reduce overall latency, it also results in a longer encoding process. For this reason, content distributors often lower the bitrate when using smaller segments to improve encoding efficiency.
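One practical consequence of shorter segments is that each segment should begin on a keyframe, so the encoder's keyframe interval has to shrink along with the segment length. The sketch below builds an FFmpeg command line for HLS output that:

- aligns keyframes with segment boundaries;
- pairs shorter segments with a lower bitrate.

The input file, output name, frame rate, and bitrate are hypothetical. The flags used (`-hls_time`, `-g`, `-keyint_min`, `-sc_threshold`, `-b:v`) are standard FFmpeg/libx264 options, but check them against your own FFmpeg build before relying on this.

```python
import shlex


def hls_ffmpeg_args(
    input_url: str,
    output_playlist: str,
    segment_s: int,
    fps: int,
    video_bitrate_kbps: int,
) -> list[str]:
    """Build an FFmpeg command for HLS output whose keyframe interval
    matches the segment length, so every segment can start on a keyframe."""
    gop = segment_s * fps  # frames per segment = keyframe interval
    return [
        "ffmpeg",
        "-i", input_url,
        "-c:v", "libx264",
        "-b:v", f"{video_bitrate_kbps}k",
        "-r", str(fps),
        "-g", str(gop),               # maximum keyframe interval
        "-keyint_min", str(gop),      # minimum keyframe interval
        "-sc_threshold", "0",         # no extra keyframes on scene cuts
        "-c:a", "aac",
        "-f", "hls",
        "-hls_time", str(segment_s),  # target segment duration in seconds
        "-hls_list_size", "6",
        output_playlist,
    ]


# Hypothetical settings: 2 s segments at 30 fps with a reduced bitrate.
args = hls_ffmpeg_args("input.mp4", "out.m3u8", 2, 30, 2500)
print(shlex.join(args))
```

Fixing the keyframe interval at `segment_s * fps` frames lets the HLS muxer cut every segment cleanly at the target duration. Disabling scene-cut keyframes keeps that spacing regular.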

4. Packaging and Protocols

Much of this article has focused on HTTP-based streaming, but other alternatives exist when low latency is what you’re after.

Traditional streaming protocols such as RTSP and RTMP support low-latency streaming, but many players don’t support them. Many broadcasters therefore use RTMP to transport live streams to their media server and then transcode them for multi-device delivery.

There are also a number of burgeoning technologies designed to decrease latency. These include:

| Protocol | Benefits | Limitations |
| --- | --- | --- |
| WebRTC | Real-time interactivity without a plugin. | Not well-suited for scale or quality. |
| SRT | Smooth playback with minimal lag. | Playback support still isn’t widespread. |
| Low-Latency CMAF for DASH | Streamlined workflows and decreased latency. | Still gaining momentum across the industry. |
| Apple Low-Latency HLS | Supported by Apple. | Spec was just announced and vendors are working to support it. |

5. Delivery, Network Conditions, and Security

Imagine you’re planning a trip by plane. You know that distance alone means a flight from New York to Japan will take longer than a flight from New York to California. You also know that poor conditions can cause weather delays regardless of how close your destination is.

Streaming data follows the same paradigm. The farther your viewers are from the media server, the longer it takes to distribute a stream. And just as the security line at the airport can be a drag, digital rights management (DRM) slows down delivery.
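The flight analogy can be put in rough numbers. Light travels through optical fiber at roughly two-thirds of its speed in a vacuum, about 200 km per millisecond. That sets a floor on delay that no amount of tuning removes. The distances below are approximate straight-line figures chosen for illustration. Real network routes are longer, and every segment request needs at least one round trip.

```python
FIBER_KM_PER_MS = 200.0  # light in optical fiber: roughly two-thirds of its vacuum speed


def propagation_delay_ms(distance_km: float, round_trip: bool = False) -> float:
    """Lower bound on network delay from distance alone.

    Real routes are longer than the straight-line distance, and routing,
    queuing, and congestion add more on top of this floor.
    """
    one_way = distance_km / FIBER_KM_PER_MS
    return 2 * one_way if round_trip else one_way


# Approximate great-circle distances from New York, for illustration.
for destination, km in (("California", 3_940), ("Japan", 10_850)):
    print(f"New York -> {destination}: at least "
          f"{propagation_delay_ms(km, round_trip=True):.0f} ms round trip")
```

Serving viewers from a nearby server shortens `distance_km`, which is the one term in this floor that you can actually change.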

Content delivery networks (CDNs) are critical for low-latency delivery, and protocols like SRT can help speed things up despite suboptimal networks.

Glass-to-Glass Latency Summarized

Confirm that any streaming vendor promising you a certain amount of latency is speaking in terms of glass-to-glass latency. As you’ve seen, there’s ample opportunity for additional delay to creep in between the broadcaster and the viewer.

We’re working to solve the HTTP low-latency puzzle: our engineers are adding support for low-latency CMAF for DASH and Apple Low-Latency HLS. Once available, these technologies should achieve 2–3 seconds of glass-to-glass latency.