3 Takeaways From Demuxed 2021

Streaming nerds from every corner of the world convened a couple of weeks back at Demuxed 2021, a video engineering event that’s never short on Big Buck Bunny cameos, acronyms, and hyper-technical talks. Demuxed has been held virtually throughout the pandemic, but luckily the conference is focused on the very technology that makes today’s virtual events possible: live video streaming.

This year’s event included talks of every variety — ranging from practical to peculiar — with a handful of themes popping up more than once. Here’s my list of three takeaways from Demuxed 2021.

1. Advancement in WebRTC Broadcasting

As Millicast’s Ryan Jespersen pointed out early in the conference, “Let’s face it, the history between WebRTC and the broadcast world has not exactly been a love affair… WebRTC has not [historically] been perceived as a viable option for streaming media.”

Jespersen was, of course, referring to Web Real-Time Communication (WebRTC), the open-source technology designed for peer-to-peer video and audio exchange at lightning speed.

WebRTC has always been a great solution for browser-based video capture and encoding. But for content distributors wanting to stream produced live content with a professional encoder, it’s presented limitations. That’s because the real-time format lacks a standard signaling protocol, making communication between encoders and media servers leveraging WebRTC a struggle.

And so, the Millicast team designed the WebRTC HTTP Ingest Protocol (WHIP) to overcome this obstacle. WHIP gives encoding software and hardware a simple way to complete the WebRTC negotiation process: an HTTP POST exchanges the Session Description Protocol (SDP) information with the media server, thus enabling WebRTC ingest across vendors.
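To make that exchange concrete, here’s a minimal Python sketch of the request an encoder might send. The endpoint URL, token, and SDP text are placeholders I made up, and the function only builds the request without sending it. Per the WHIP specification as I understand it, the offer travels as `application/sdp` and the server replies with its own SDP answer.

```python
import urllib.request
from typing import Optional


def build_whip_request(endpoint_url: str, sdp_offer: str,
                       bearer_token: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) an HTTP POST carrying an SDP offer."""
    headers = {"Content-Type": "application/sdp"}
    if bearer_token:
        # Bearer auth is an assumption here; deployments may differ.
        headers["Authorization"] = f"Bearer {bearer_token}"
    return urllib.request.Request(
        endpoint_url,
        data=sdp_offer.encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Hypothetical values for illustration only.
offer = "v=0\r\no=- 0 0 IN IP4 127.0.0.1\r\ns=-\r\nt=0 0\r\n"
request = build_whip_request(
    "https://media.example.com/whip/my-stream", offer, "example-token"
)
```

Passing `request` to `urllib.request.urlopen` would perform the actual POST; a real encoder would then feed the SDP answer from the response body back into its WebRTC stack.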

Other interesting WebRTC talks included a session on holographic video calling by Facebook’s Nitin Garg and Janet Kim’s presentation on building an interactive conferencing platform in thirty days.

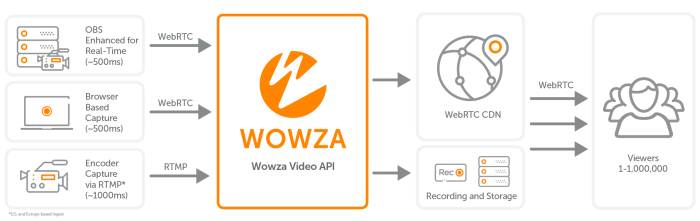

WebRTC adoption will continue to grow as vendors work to improve its usability for live video broadcasting, including support for alternate codecs and features such as HDR. It’s also gaining traction as a video delivery format due to improvements in scalability. At Wowza, WebRTC is the underlying technology powering our Real-Time Streaming at Scale feature for Wowza Streaming Cloud, and we’re building WHIP support into our future OBS implementation for the feature.

Workflow: Real-Time Streaming at Scale

2. Monitoring, Observability, and Quality of Experience (QoE) Metrics

Pinpointing the source of streaming issues has always been a challenge. Perhaps your stream is slow to start or, worse, fails to play altogether. A streaming workflow often integrates several vendors, so end-to-end visibility doesn’t come easy.

DataZoom’s Josh Evans explained in his talk, “It could be an issue with the player, some problem with its buffering algorithm or bitrate selection. It could be an issue with content encoding or packaging. In terms of real-time delivery for VOD, it could just be a slow or failing origin, or a slow or failing CDN cache or set of caches. It could also be the network. Anywhere in between any of these nodes in the network, there could be a problem that’s creating latency.”

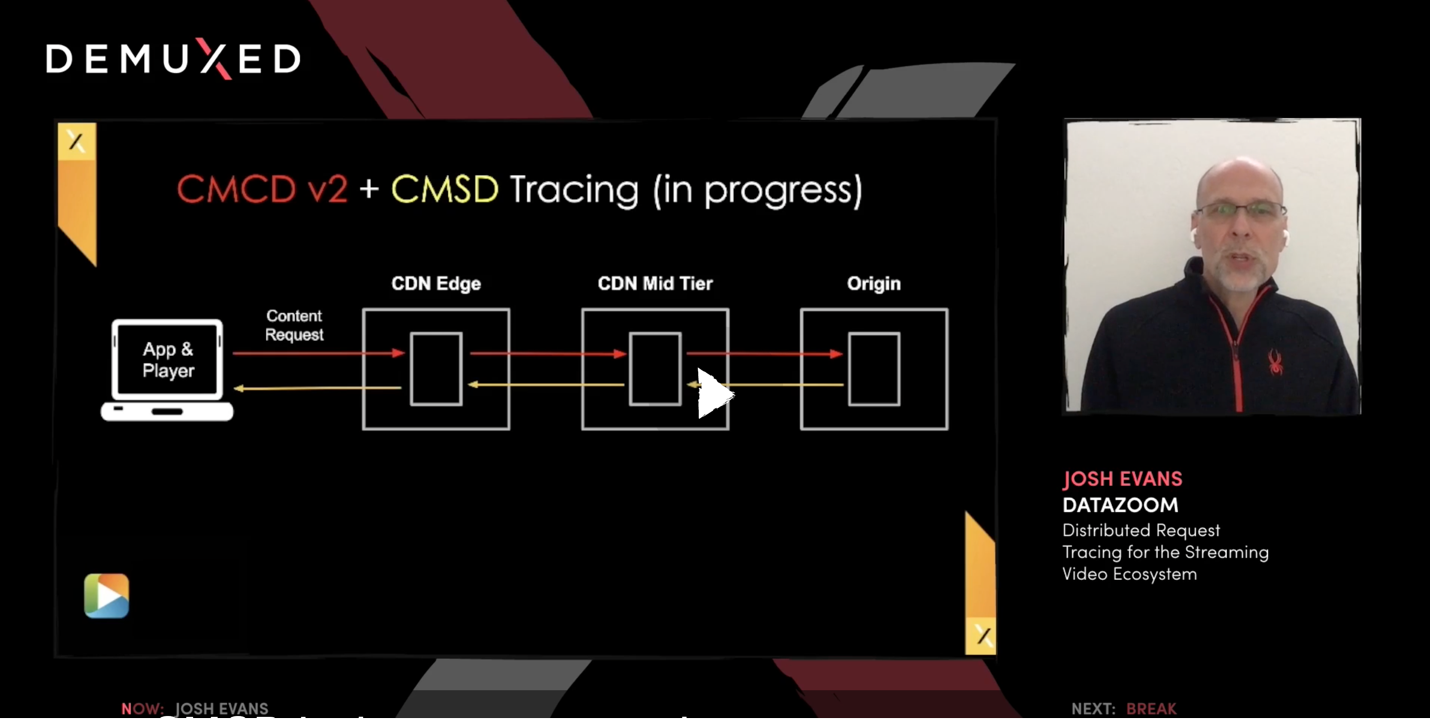

And unless you’re an engineer at Netflix, chances are your streaming ecosystem is made up of disparate systems and vendors. The first hurdle is collecting all the data and putting it into a standardized format. There is a project underway in the Streaming Video Alliance Quality of Experience (QoE) Working Group and the Consumer Technology Association (CTA) to build out a proof of concept (POC) that addresses this challenge using distributed request tracing with OpenTelemetry and two emerging specifications:

- Common media client data (CMCD): “A simple means by which every media player can communicate data with each media object request and have it received and processed consistently by every CDN.”

- This follows the request path in red below, from player to origin.

- Common media server data (CMSD): “A standard means by which every media server can communicate data with each media object response and have it received and processed consistently by every player and intermediate proxy server, for the purpose of ultimately improving the quality of experience enjoyed by end users.”

- This follows the response path in green below, from origin back to player.
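As a rough illustration of the request-side idea, here’s a minimal Python sketch that packs a few player metrics into a single `CMCD` query argument. The key names (`bl` for buffer length, `br` for bitrate, `bs` for buffer starvation, `sid` for session ID) follow the published CTA spec as I understand it; the values are invented, and this simplified encoder doesn’t handle token-typed keys or the header-based transport.

```python
from urllib.parse import quote


def encode_cmcd(data: dict) -> str:
    """Serialize key/value pairs into a CMCD-style query argument."""
    parts = []
    for key in sorted(data):  # keys are emitted in alphabetical order
        value = data[key]
        if value is True:
            parts.append(key)  # a true boolean is sent as the bare key
        elif value is False or value is None:
            continue  # this sketch simply omits false/unset values
        elif isinstance(value, str):
            parts.append(f'{key}="{value}"')  # strings are double-quoted
        else:
            parts.append(f"{key}={value}")  # numbers are sent as-is
    # Percent-encode the whole payload so it is safe inside a URL.
    return "CMCD=" + quote(",".join(parts), safe="")


# Hypothetical player state for a single segment request.
query = encode_cmcd({"br": 3200, "bl": 21300, "bs": True, "sid": "session-123"})
segment_url = "https://cdn.example.com/video/seg_42.m4s?" + query
```

Because the data rides along with every media request, a CDN that understands it can log player state next to its own cache metrics without any extra beaconing.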

The project goal is to leverage tracing, CMCD, and, ultimately, CMSD to improve communication and interoperability across a streaming workflow by normalizing metrics. But once the data has been correlated from end to end, visualization is needed to leverage it in a meaningful way. A talk given by Sean McCarthy of ViacomCBS focused on this next challenge. Specifically, he talked about Visor, the dashboard used for real-time monitoring during the Super Bowl.

The combination of technologies like CMCD and distributed tracing, along with real-time interfaces for acting on this data, shows a lot of promise in improving stream monitoring and observability today. We recently published a blog post at Wowza on using health metrics to troubleshoot streaming issues and are continuing to enhance monitoring and observability across our platform.
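To picture what end-to-end correlation buys you, consider this small Python sketch. It assumes each hop has already reported a timing record tagged with a shared trace ID; the `Span` class, hop names, and numbers are all hypothetical and aren’t taken from OpenTelemetry or the working group’s POC.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class Span:
    trace_id: str       # shared by every hop that served one request
    hop: str            # e.g. "player", "cdn", "origin"
    duration_ms: float  # time spent at this hop


def slowest_hop_per_trace(spans: Iterable[Span]) -> Dict[str, str]:
    """Group spans by trace ID and name the slowest hop in each trace."""
    by_trace = defaultdict(list)
    for span in spans:
        by_trace[span.trace_id].append(span)
    return {
        trace_id: max(group, key=lambda s: s.duration_ms).hop
        for trace_id, group in by_trace.items()
    }


# Made-up timings for two segment requests.
spans = [
    Span("req-1", "player", 12.0),
    Span("req-1", "cdn", 48.0),
    Span("req-1", "origin", 310.0),
    Span("req-2", "player", 95.0),
    Span("req-2", "cdn", 20.0),
]
slowest = slowest_hop_per_trace(spans)
```

Here `slowest` points at the origin for the first request and the player for the second, which is exactly the kind of answer that’s hard to get when each vendor’s data lives in its own silo.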

3. Video Artificial Intelligence (AI)

The use cases for video artificial intelligence (AI) are countless. From object detection to emotion analysis, AI enables today’s broadcasters to act faster, smarter, and more effectively. Two presentations at Demuxed focused on video AI: Ali Begen’s exploration of content-aware adaptive playback for low-latency streaming and Eric Tang’s deep dive on content classification.

More specifically, Begen discussed adapting the playback of live sports broadcasts. By using an algorithm to identify the most interesting parts of a streaming event (e.g., the game-winning shot), broadcasters can maintain smooth, low-latency playback when it matters most. This is done by applying speed-up and slow-down mechanisms to less critical (and thus less noticeable) parts of the broadcast.

With this technique, a stream can buy time or quickly catch up via speed changes applied to static scenes where nobody is talking. As a result, low latency is maintained throughout and buffering is avoided altogether. This same approach benefits video on demand (VOD) content as well, ensuring a higher-quality experience when watching a movie on Netflix, for instance.
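The gist can be sketched in a few lines of Python. This is my own simplified illustration, not the algorithm from Begen’s talk: the tolerance, the 10% rate adjustment, and the `segment_is_critical` flag (which would come from some content-analysis step) are all assumptions.

```python
def choose_playback_rate(latency_s: float, target_latency_s: float,
                         segment_is_critical: bool,
                         tolerance_s: float = 0.5,
                         max_adjust: float = 0.1) -> float:
    """Pick a playback rate, changing speed only during non-critical content."""
    if segment_is_critical:
        return 1.0  # never alter speed during the moments that matter
    drift = latency_s - target_latency_s
    if drift > tolerance_s:
        return 1.0 + max_adjust  # behind target: speed up to catch up
    if drift < -tolerance_s:
        return 1.0 - max_adjust  # ahead of target: slow down to buy time
    return 1.0  # close enough to target: play normally


# Hypothetical situations, all with a 3-second latency target.
catch_up = choose_playback_rate(5.0, 3.0, segment_is_critical=False)
hold = choose_playback_rate(5.0, 3.0, segment_is_critical=True)
buy_time = choose_playback_rate(2.0, 3.0, segment_is_critical=False)
```

A player would call something like this once per segment and apply the result to its playback-rate control, so corrections land in quiet stretches rather than during the big play.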

Another application for AI was detailed in Tang’s talk, which looked at using content classification for live streams to detect fraudulent activity. Tang’s demo is available as an open-source project, so you can experiment with your own training set in your own environment.

“Sometimes you only realize after the fact that someone has used your platform to stream something like a Premier League soccer game that then went out to thousands or tens of thousands of viewers on the internet… And this happens quite often in today’s UGC-centric live streaming world.”

Not only does AI-powered content classification show promise for fraud detection, it can also replace manual review and therefore work at scale. These are just a few of the ways AI can be used in video workflows, which remain a very exciting area for research and innovation. At Wowza, we’re constantly looking at ways technology like this can be used to enhance our platform.

Conclusion

It’s impossible to do justice to the breadth and depth of talks given at this year’s conference in one article, so be sure to take a look at 2021.demuxed.com for a complete list of presentations. The Demuxed team will be uploading the edited talks to YouTube later this year, and is encouraging anyone chomping at the bit to contact them via email for advance access.
