HLS Latency Sucks, But Here’s How to Fix It (Update)

Apple’s HTTP Live Streaming (HLS) protocol is the preferred format for video delivery today, although it’s not without its shortcomings, namely latency. Still, streamers flock to it for its reliability and compatibility with a myriad of devices. As concerns over latency grew, Apple answered the call and developed a low-latency extension. Low-latency HLS (LL-HLS) makes the HLS protocol a viable solution for streamers looking for a more real-time experience.

In this article, we’ll explore the strengths and weaknesses of both HLS and LL-HLS, how to adapt traditional HLS when LL-HLS is not an option, and how they stack up against other speedy alternatives.

Understanding the HLS Protocol

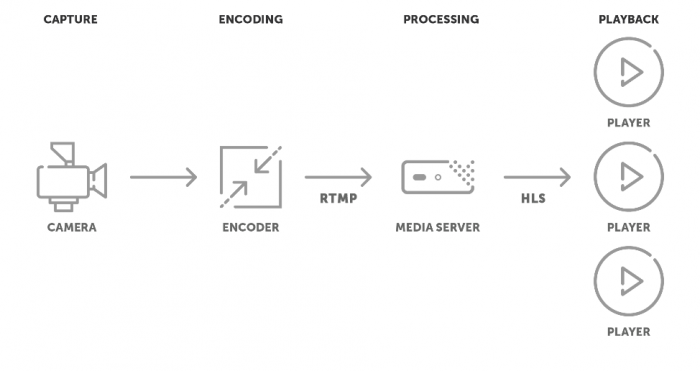

Like other egress streaming protocols, HLS transports audio and visual media from a media server through last-mile delivery to a playback device. HLS breaks this media up into a series of streaming segments, each compressed separately and sent in a consistent stream for smooth delivery. Other streaming protocols, like Dynamic Adaptive Streaming Over HTTP (MPEG-DASH), work similarly. However, what sets HLS apart is its widespread compatibility.

This is because HLS was developed by Apple, a company notorious for restricting its devices to proprietary technology. As a result, just about any device can stream HLS, but Apple devices will only stream HLS. In this way, HLS’s popularity is a product of necessity for anyone wanting to reach Apple playback devices.

How HLS Works

Typically, HLS is the second half of a hybrid RTMP-to-HLS workflow. Raw media is encoded and sent via RTMP to a media server where it is transcoded into HLS media segments, aka chunks. These chunks may include multiple bitrate variants for adaptive bitrate (ABR) streaming.

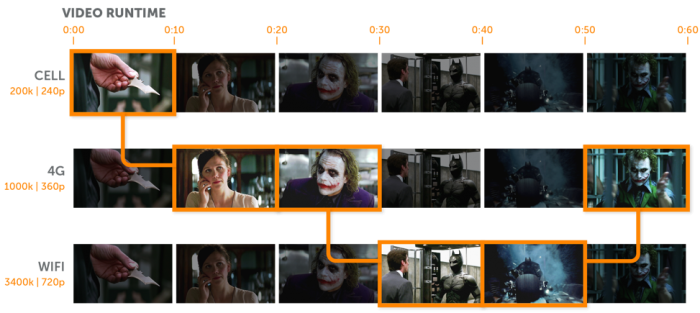

The media server lists these chunks in a playlist, or manifest (HLS uses an .m3u8 playlist file), and the player requests and reassembles the chunks in the order the playlist gives. This guarantees the chunks are played back in the correct order. It also lets the stream adapt to ABR feedback. In other words, as chunks arrive at a given playback device at a certain video bitrate, the player continuously measures the device’s available bandwidth. As such, it may request ensuing chunks at a higher or lower bitrate to ensure optimal video quality.
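To make the ABR decision concrete, here is a minimal sketch of how the next chunk's bitrate might be chosen. The `Variant` type, the `pick_variant` function, the bitrate ladder, and the 0.8 safety factor are all hypothetical illustrations, not part of the HLS specification or any Wowza API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    """One bitrate rendition of the stream (hypothetical type)."""
    name: str
    bandwidth_bps: int  # bitrate advertised for this rendition


def pick_variant(variants, measured_bps, safety_factor=0.8):
    """Return the highest-bitrate variant that fits the measured bandwidth.

    safety_factor is an assumed headroom margin. If no variant fits,
    the lowest-bitrate variant is returned so playback can continue.
    """
    ordered = sorted(variants, key=lambda v: v.bandwidth_bps)
    budget = measured_bps * safety_factor
    chosen = ordered[0]
    for variant in ordered:
        if variant.bandwidth_bps <= budget:
            chosen = variant
    return chosen


# Example ladder; the names and bitrates are illustrative only.
LADDER = [
    Variant("360p", 800_000),
    Variant("720p", 2_500_000),
    Variant("1080p", 5_000_000),
]
```

With this ladder, a measured bandwidth of 4 Mbps leaves a 3.2 Mbps budget, so the 720p variant would be selected. Real players use more elaborate heuristics, such as buffer level and throughput history.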

By chunking the media with ABR variants, HLS can reliably reach a wider audience with a high-quality stream. However, the process also slows the stream down, causing latency.

Introducing Low-Latency HLS

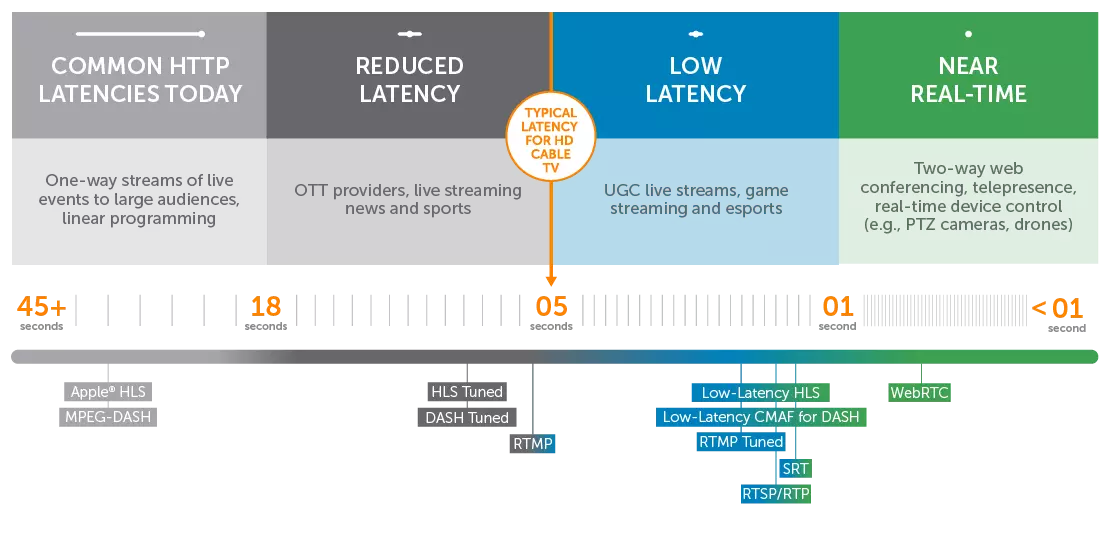

Apple’s LL-HLS is a protocol extension first announced in 2019, a decade after the HLS protocol’s initial release. In 2020, Apple fully incorporated LL-HLS into the broader HLS standard. Since then, it’s been a viable albeit still developing option for those who enjoy the scalability and adaptability of HLS but not the sluggishness. This protocol extension brings HLS latency from upwards of 45 seconds to just two or three in part due to taking the video segments and further breaking them down into partial segments for quicker delivery.
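The idea of breaking segments into partial segments can be sketched as simple arithmetic. The function name and the example durations below are assumptions chosen for illustration; they are not values Apple prescribes.

```python
def split_into_parts(segment_seconds, part_target_seconds):
    """Return the durations (in seconds) of the parts that make up one segment.

    Works in whole milliseconds to avoid floating-point drift. The final
    part may be shorter than the target when the division is not exact.
    """
    remaining_ms = round(segment_seconds * 1000)
    part_ms = round(part_target_seconds * 1000)
    if remaining_ms <= 0 or part_ms <= 0:
        raise ValueError("durations must be at least one millisecond")
    parts = []
    while remaining_ms > 0:
        current_ms = min(part_ms, remaining_ms)
        parts.append(current_ms / 1000)
        remaining_ms -= current_ms
    return parts
```

For instance, a two-second segment split at a half-second target yields four parts, so a player can begin fetching media after half a second rather than waiting for the full segment to be written.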

Benefits of the HLS Protocol

Remember when streaming video online meant constant buffering? The HLS streaming chunks described above solve that problem, ensuring your stream can be played back seamlessly, in high quality, without causing the spinning ball of death.

Beyond that, HLS reduces the cost of delivering content. Using affordable HTTP infrastructures, content owners can easily justify delivering their streams to online audiences and expand potential viewership. HLS is also ubiquitous – ensuring playback on more devices and players than any other technology – which means that it’s a convenient technology to use that doesn’t require specialized workflows.

Regardless of whether viewers are using an iOS, Android, HTML5 player, or even a set-top box (Roku, Apple TV, etc.), HLS streaming is available. It also scales well thanks to its packetized content distribution model. Additionally, it provides video content delivery networks (CDNs) and streaming service providers with a relatively common platform to standardize across their infrastructure, allowing for edge-based adaptive bitrate (ABR) transcoding.

Concerns Over HLS Latency

HLS boasts unmatched compatibility across devices, high-quality video, and scalability to countless viewers. But while HLS surges in popularity, we continue to hear complaints from customers about latency. The general feedback is: “HLS latency sucks.”

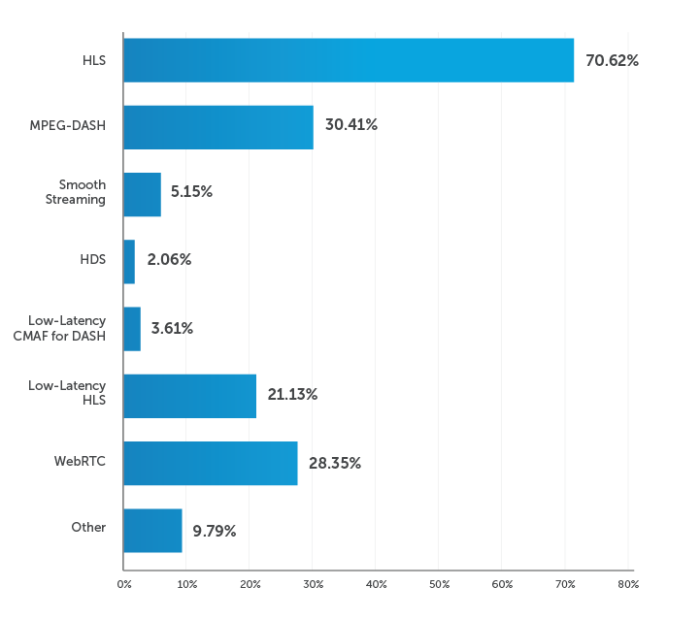

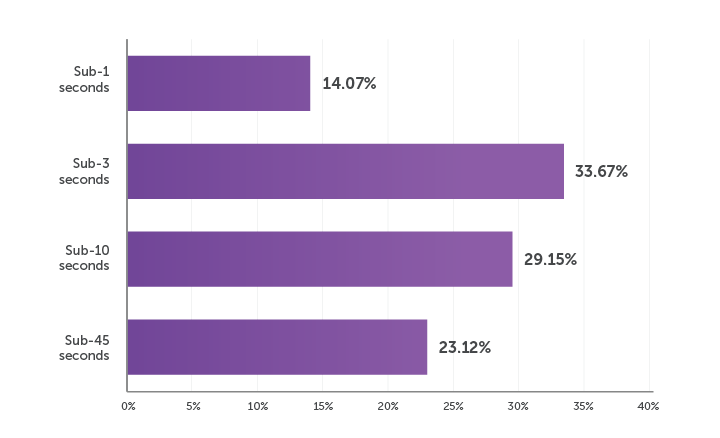

Just peek at the following two charts. More than 70% of respondents in our 2021 Video Streaming Latency Report indicated that they use HLS — with more than 23% experiencing latency in the 10-45 second range.

Which streaming formats are you currently using?

How much latency are you currently experiencing?

Lag time aside, HLS has a lot of things going for it. And it can’t be ignored as a viable option for your streaming workflow.

Causes of Latency With HLS

For many of the same reasons that HLS is great, it drags its heels when it comes to delivery speed. The sources of HLS latency include the encoding, transcoding, distribution, and default playback buffer requirements for HLS.

When streaming with HLS, Apple recommends a six-second chunk size (also called segment duration) and a certain number of segments to create a meaningful playback buffer. This results in about 20 seconds of glass-to-glass delay. Plus, when you introduce CDNs for greater scalability, you inject another 15-30 seconds of latency so the servers can cache the content in-flight, not to mention any last-mile congestion that might slow down a stream.
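A back-of-the-envelope model shows where those numbers come from. The sketch below assumes the player buffers three segments before playback starts and lumps encoding and transcoding into a single overhead figure; both are illustrative assumptions, and the function name is hypothetical.

```python
def estimate_latency_seconds(segment_seconds, buffered_segments,
                             pipeline_overhead_seconds=0.0, cdn_seconds=0.0):
    """Rough glass-to-glass estimate: player buffer + pipeline + CDN delay."""
    player_buffer = segment_seconds * buffered_segments
    return player_buffer + pipeline_overhead_seconds + cdn_seconds


# Six-second segments, three buffered (assumed), two seconds of
# encode/transcode overhead (assumed): about 20 seconds in total.
default_hls = estimate_latency_seconds(6, 3, pipeline_overhead_seconds=2)

# Adding the low end of the 15-30 second CDN range quoted above.
with_cdn = estimate_latency_seconds(6, 3, pipeline_overhead_seconds=2,
                                    cdn_seconds=15)
```

The model also shows why segment duration is the main lever: with the same three-segment buffer, one-second segments cut the player buffer from 18 seconds to 3.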

For these reasons, standard HLS isn’t a viable option when interactivity or broadcast-like speed matters. Nobody wants to see spoilers in their phone’s Twitter feed before the ‘live’ broadcast plays a game-winning touchdown. Likewise, large delays interrupt the two-way nature of game streaming and user-generated content (UGC) applications like Facebook Live or Twitch. Consumers today expect their content to arrive as fast as possible, regardless of what a given streaming app can realistically deliver.

Tuning HLS for Low Latency

One option for streaming lower-latency Apple HLS content is to tune your workflow. Using the Wowza Video platform or Wowza Streaming Engine, users can manipulate the segment size for reduced-latency HLS streams. Below, we provide a tutorial for the latter.

Steps for Reducing Latency in Wowza Streaming Engine

When delivering lower-latency HLS streams in Wowza Streaming Engine, there are four settings you’ll want to modify. We go into more detail on each in the list below.

- Reduce your HLS chunk size. Currently, in the HLS Cupertino default settings, Apple recommends a segment duration of at least six seconds. We have seen success reducing this to half a second. To do so, set chunkDurationTarget to your desired length (in milliseconds). HLS chunks are only created on keyframe boundaries, so if you reduce the chunk size, ensure it is a multiple of the keyframe interval or adjust the keyframe interval to suit.

- Increase the number of chunks. Wowza Streaming Engine stores chunks to build a significant buffer should there be a drop in connectivity. The default value is ten, but for reduced-latency streaming, we recommend storing 50 seconds of chunks. For one-second chunks, set the MaxChunkCount to 50; if you’re using half-second chunks, the value should be 100.

- Modify playlist chunk counts. The number of items in an HLS playlist defaults to three, but for lower latency scenarios, we recommend 12 seconds of data to be sent to the player. This prevents the loss of chunks between playlist requests. For one-second chunks set the PlaylistChunkCount value to 12; if you’re using half-second chunks, the value should be doubled (24).

- Set the minimum number of chunks. The last thing you want to adjust is how many chunks must be received before playback begins. We recommend a minimum of 6 seconds of chunks to be delivered. To configure this in Wowza Streaming Engine, use the custom CupertinoMinPlaylistChunkCount property. For one-second chunks, set it to 6, or 12 for half-second chunks.
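The four recommendations above reduce to a little arithmetic on the chunk duration. The helper below is a hypothetical sketch, not a Wowza API: it only computes the values you would then enter for the properties named in the list, and it applies the keyframe rule from the first item.

```python
def tuned_chunk_settings(chunk_seconds, keyframe_interval_seconds):
    """Compute the four property values for a given chunk duration.

    Uses the targets from the list above: 50 seconds stored, 12 seconds in
    the playlist, 6 seconds before playback. Durations are handled in whole
    milliseconds to avoid floating-point drift.
    """
    chunk_ms = round(chunk_seconds * 1000)
    keyframe_ms = round(keyframe_interval_seconds * 1000)
    if chunk_ms <= 0 or keyframe_ms <= 0:
        raise ValueError("durations must be at least one millisecond")
    if chunk_ms % keyframe_ms != 0:
        raise ValueError("chunk duration must be a multiple of the keyframe interval")

    def chunks_for(seconds):
        # Round up so the window never holds less than the target duration.
        return (seconds * 1000 + chunk_ms - 1) // chunk_ms

    return {
        "chunkDurationTarget": chunk_ms,
        "MaxChunkCount": chunks_for(50),
        "PlaylistChunkCount": chunks_for(12),
        "CupertinoMinPlaylistChunkCount": chunks_for(6),
    }
```

Calling `tuned_chunk_settings(0.5, 0.5)` gives 500, 100, 24, and 12, matching the half-second figures in the list, while `tuned_chunk_settings(0.5, 2)` raises an error because a two-second keyframe interval cannot produce half-second chunks.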

Risks of Tuning HLS for Latency

Tuned HLS comes with inherent risks. First, smaller chunk sizes may cause playback errors if you fail to increase the number of seconds built into the playlist. If a stream is interrupted, and the player requests the next playlist, the stream may stall when the playlist doesn’t arrive.

Additionally, by increasing the number of segments that are needed to create and deliver low-latency streams, you also increase the server cache requirements. To alleviate this concern, ensure that your server has a large enough cache or built-in elasticity. You will also need to account for greater CPU and GPU utilization resulting from the increased number of keyframes. This requires careful planning for load-balancing, with the understanding that increased computing and caching overhead incurs a higher operation cost.

Lastly, as HLS chunk sizes get smaller, the overall quality of the video playback can be impacted. This may result in either not being able to deliver 4K video to the player, or small playback glitches with an increased risk of packet loss. Essentially, as you increase the number of chunks (and the keyframes that mark their boundaries), you require more processing power for smooth playback. Otherwise, you get packet loss and interruptions.

Alternative Formats for Low-Latency Streaming

Luckily, there are a few lower-risk alternatives for speeding things up: the aforementioned Apple Low-Latency HLS, Web Real-Time Communications (WebRTC), and Low Latency CMAF for DASH (LL-DASH).

Apple Low-Latency HLS

Apple’s Low-Latency HLS protocol was designed to achieve roughly two-second latencies at scale — while also offering backwards compatibility to existing clients.

Pros

Backwards compatibility is a major benefit of Low-Latency HLS. Players that don’t understand the protocol extension will play the same streams back, with a higher (regular) latency. This allows content distributors to leverage a single HLS solution for optimized and non-optimized players.

Low-Latency HLS promises to put streaming on par with traditional live broadcasting in terms of content delivery time. Consequently, we expect it to quickly become a preferred technology for OTT, live sports, esports, interactive streaming, and more.

Cons

The Low-Latency HLS spec is still evolving, and support for it is being added across the streaming ecosystem. As a result, large-scale implementations remain few and far between. It also lags behind WebRTC in terms of delivery speed. For the lowest latency possible, you’ll be better off swapping out HLS altogether.

Low-Latency CMAF For DASH

MPEG-DASH is, like HLS, an adaptive HTTP-based protocol that streams in segments and can utilize ABR streaming. However, unlike HLS, MPEG-DASH is not proprietary and suffers from its lack of association with Apple, making it less widely supported.

Low-Latency Common Media Application Format (CMAF) for DASH is the speedy alternative to standard MPEG-DASH. However, it’s a bit more complicated than just a protocol extension. CMAF is a newer media format for packaging HTTP-based media, seeking to standardize streaming and improve cross-compatibility between HLS and DASH. CMAF also standardizes smaller chunk sizes, which promotes lower latency. When MPEG-DASH utilizes the CMAF standard for media packaging and delivery, you get Low-Latency CMAF for DASH, also called Low-Latency MPEG-DASH.

Pros

CMAF helps to level the playing field between HLS and DASH, reducing the usual concerns regarding compatibility. At three-second or so latency, LL-CMAF for DASH is also much faster than traditional HLS and comparable to LL-HLS. So why might you select this option over LL-HLS? For starters, as a non-proprietary technology, it’s codec agnostic. In other words, you are not limited to specific audio or video codecs for compressing your media files.

Cons

Also like LL-HLS, LL-CMAF for DASH is not the fastest option available. You continue to be limited to a few seconds of latency. For truly real-time streaming, you should consider WebRTC.

WebRTC

WebRTC delivers near-instantaneous streaming to and from any major browser. The technology was designed for video conferencing and thus supports sub-500-millisecond latency – without requiring third-party software or plug-ins.

Pros

Everything from low-latency delivery to interoperability makes WebRTC an attractive, cutting-edge technology. All major browsers and devices support WebRTC, making it simple to integrate into a wide range of apps without dedicated infrastructure. It’s also the quickest method for transporting video across the internet, as mentioned above.

Cons

While it’s the speediest protocol out there, scaling to more than 50 concurrent peer connections requires additional resources. Additionally, it lacks some of the adaptability of LL-HLS and LL-CMAF for DASH. Where they can utilize ABR, WebRTC has less nuanced options, like WebRTC simulcast and scalable video coding (SVC). Luckily, we designed Real-Time Streaming at Scale for Wowza Video to overcome scalability and adaptability limitations. The new feature deploys WebRTC across a custom CDN using WebRTC simulcasting to support quality interactive streaming to a million viewers.

HLS Alternatives Comparison Chart

| | LL-HLS | LL-CMAF for DASH | WebRTC |
|---|---|---|---|
| Latency | Roughly 2 seconds | Roughly 2 seconds | Sub-second |
| Scalability | Highly scalable | Scalable to limited devices | Scalable with additional infrastructure |
| Compatibility | Highly compatible | Not available to Apple devices | Highly compatible with additional resources |
| Adaptability | ABR streaming option | ABR streaming option | WebRTC simulcasting and SVC option |

Conclusion

No matter what your latency requirements are, Wowza makes it happen. Our full-service platform can power any workflow – with reliability to boot. We offer protocol flexibility on the ingest side as well as delivery, meaning you’re able to design the best streaming solution for your use case rather than sticking with one prescriptive workflow.